AI at Your Service: Navigating the New Frontier of Personal Assistants

Exploring the Impact of Entrusting Your Lifestyle to an AI Companion

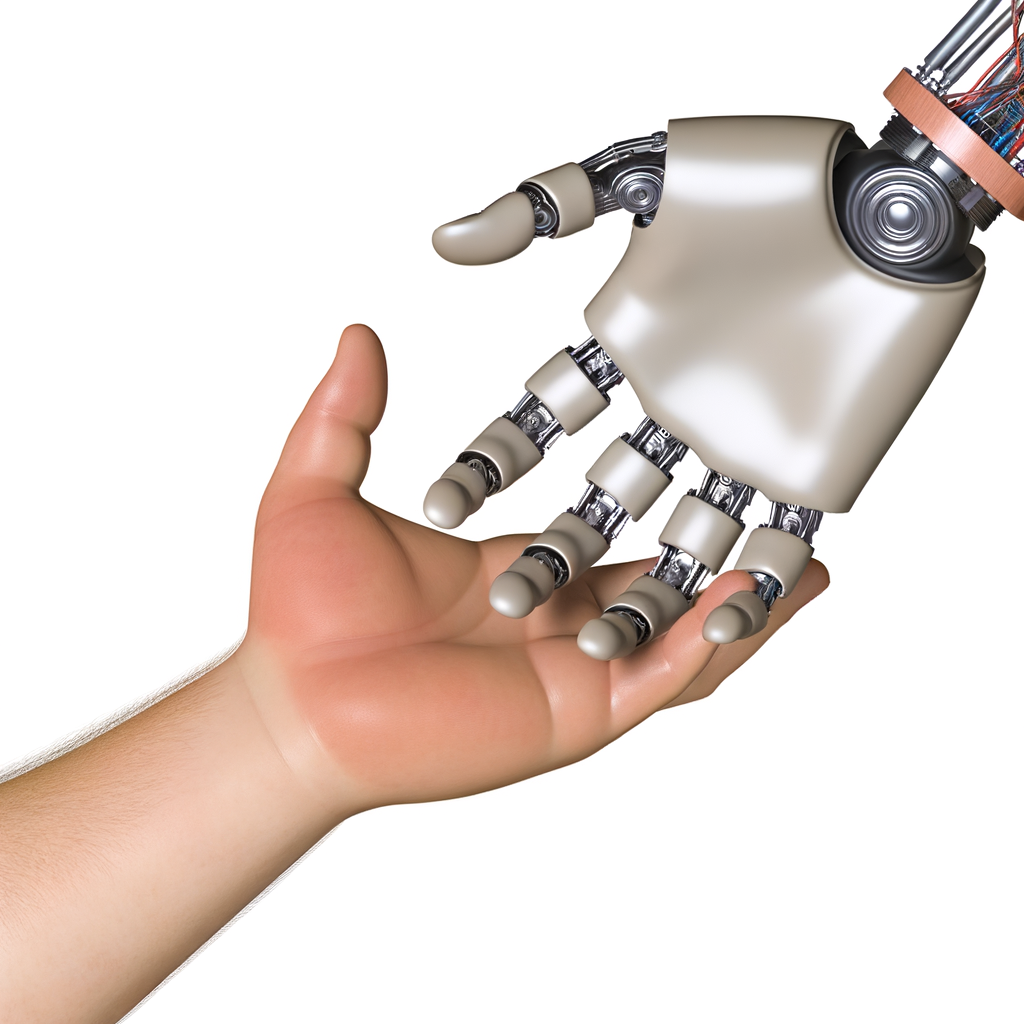

Sometimes you just need a little help. Enter the robots! But have we really reached the point where artificial intelligence offers better support than working with a human specialist? This week, we share what we learned after spending a week relying on AI assistants.

Stay connected with Michael Calore on Bluesky via his handle @snackfight, connect with Lauren Goode on the same platform at @laurengoode, and reach out to Zoë Schiffer on Threads through @reporterzoe. Feel free to send us an email at uncannyvalley@wired.com.

Listening Guide

To tune into this week's podcast episode, simply use the audio player available on this webpage. However, if you're interested in automatically receiving every new episode, you can subscribe at no cost by following these steps:

If you're on an iPhone or iPad, open the Podcasts app and search for “Uncanny Valley.” You can also download an app like Overcast or Pocket Casts and search there. We're on Spotify too.

Transcript Disclaimer: Please be advised that this transcript was generated by an automatic service and might include inaccuracies.

Michael Calore: Okay, we're a few weeks into the new year. How are your self-improvement resolutions holding up?

Lauren Goode: I'm feeling well. I'm exploring new things. Those are the words of Taylor Swift.

Michael Calore: At this juncture, it's something I just take

Lauren Goode: Things are going fine. We've all been testing AI, which is supposedly gaining more autonomy to do tasks on our behalf. I decided to run my own test by using it for holiday shopping. Shopping isn't really my thing, so I was eager to hand off some of that responsibility.

Zoë Schiffer expressed enthusiasm about learning more.

Lauren Goode: It felt like an odyssey. What about your experience, Zoe?

Zoë Schiffer: My project was essentially to support my 4-month-old daughter in her ambition to sleep through the night. My role was simply to help her achieve her 2025 dream, with a bit of help from artificial intelligence, which I'll talk about today. Mike, what about you?

Michael Calore: I used Gemini on my phone to get guidance on improving my health, asking for exercise tips and help planning my meals. Then I ventured into more complex territory, like civics.

Lauren Goode: I really appreciate that both of you turned to chatbots for health matters, and I sincerely believe it was in the service of consumers.

Zoë Schiffer: Depending on your perspective, it may pertain to health issues.

Lauren Goode: Absolutely. My goal was to clear out my mind to allow room for different pursuits.

Zoë Schiffer: That's wonderful for you.

Lauren Goode: I appreciate it.

Michael Calore: Welcome to Uncanny Valley from WIRED, a show about the people, power, and influence of Silicon Valley. I'm Michael Calore, and I oversee consumer technology and culture here at WIRED.

Zoë Schiffer: I'm Zoë Schiffer, director of business and industry at WIRED.

Lauren Goode: And I'm Lauren Goode, a senior writer at WIRED.

Michael Calore: On this week's episode, we're talking about how we used AI to improve our daily lives. We took our cues from chatbots and let them guide us through tasks like shopping, meal planning, exercise, and even parenting. The results were quite something, and we're excited to dig in. So, Zoe, why don't we start with you? Could you tell us about using ChatGPT as your mentor?

Zoë Schiffer first shares some personal background to set the stage for her experiment. In 2022, when her first child was 4 months old, she hired a sleep consultant for $500 to help with sleep training. She describes the experience as particularly hard because of how much crying it involved, but she valued having a professional guide her through the process and reassure her that she wasn't harming her child in the long term. Now her second daughter is 4 months old, and with a new role at WIRED on the horizon, she felt it was the right moment to sleep train again. When she suggested rehiring the consultant for another $500, her husband was incredulous. He questioned why they should spend that much again just for outside validation that she wasn't failing as a parent. His skepticism led her to consider ChatGPT as an alternative.

Lauren Goode: Did that individual really spend the night at your place?

Zoë Schiffer explained that the specialist worked remotely, and noted that the term "expert" is used loosely in this field, since no formal certification is required to claim expertise. Those vague criteria were part of what drew her to try AI for the job. Her skepticism was confirmed when the first consultant she hired sent a supposedly tailored plan for her daughter that mistakenly included another child's name. In practice, parents share details of their child's routines with the consultant, who then gives daily advice on the sleep schedule, including nap and bedtime recommendations, based on how the previous night went.

Michael Calore: Did you engage in daily conversations with ChatGPT?

Zoë Schiffer shared that she first instructed ChatGPT to adopt the persona of a magical nanny: a sage figure who could provide emotional reassurance and had deep knowledge of child psychology, specifically to guide her through the Ferber technique. The technique involves putting the child down awake and then returning at increasing intervals to offer soothing words, without picking them up. Schiffer wanted ChatGPT's thorough guidance in applying it. On the first day of sleep training her daughter Cleo, she asked ChatGPT for advice, and the response fully embraced the magical nanny role: “Dear Zoe, let’s sprinkle some enchantment over Cleo’s day. Assuming our beloved Cleo woke with the dawn at 6:30 am, her subsequent nap should harmonize with the natural patterns of her alertness. Imagine that.” In practice, Schiffer found the over-the-top persona exasperating.

Lauren Goode: What led you to choose ChatGPT over other chatbot options?

Zoë Schiffer: Because it's become my go-to. It has effectively replaced Google as my search tool. But when I tried to use it like one of the more autonomous AI agents you've talked about before, the experience wasn't as good. It felt like I was asking a search engine to turn into something completely different. Here's more from ChatGPT: when it's time for that perfect hour of rest, create a calming atmosphere, maybe with a gentle melody or the sound of something she loves… Honestly, the whole peculiar affirmation thing doesn't appeal to me.

Lauren Goode: Who doesn't wish for a nap that's softly led by the natural patterns of our alertness?

Zoë Schiffer expressed her reservations about the AI's excessive, oddly elaborate language. She quickly identified several issues, the foremost being that she didn't trust its guidance, especially on something as personal as parenting. She wanted a real person's insight and doubted that AI could offer the best recommendations, and that skepticism was a significant barrier to fully embracing it. She also wondered whether the others had noticed how purely reactive the AI was: it only answered direct questions, never initiating interaction or asking questions of its own, which in her view made it no different from a basic search engine.

Michael Calore: Exactly, it was merely responding to questions.

Zoë Schiffer: Indeed, it seemed to lack a sense of humanity, if you will.

Lauren Goode: Did you ever catch yourself double-checking on Google to confirm it really was the right time?

Zoë Schiffer said this was an interesting point. When she uses the technology for search, she often double-checks the results, half-jokingly worried they might be wrong, because she wants to be certain. Here, though, the answers on a topic she knows well were so rudimentary that there was no need to verify them. Where she did find a significant shortfall was in comparing the AI's guidance with advice from people who have lived it, such as fellow parents. Conventional wisdom and official health guidelines, for instance, advise against putting babies to sleep on their stomachs. Yet when Schiffer described Cleo's discomfort, friends drawing on their own experience suggested doing exactly that, claiming that letting a baby sleep on her belly could solve several problems. A chatbot that sticks strictly to official guidelines would never offer that advice. Schiffer appreciates the importance of safe practices but notes that AI can't provide the nuanced, sometimes unconventional wisdom that comes from other parents' experience.

Michael Calore: You asked it to take on a character with its own identity. That adds a layer of emotion that's missing when you're just looking up information or searching online. But it doesn't substitute for guidance from a real person, which probably creates an odd sense of conflict.

Zoë Schiffer pointed out that the persona ChatGPT first adopted felt excessively artificial, full of unnecessary, convoluted language that she found irritating. So halfway through her experiment, she switched to Character.ai, which let her create a more genuine AI character that felt closer to human. She named the bot Lindsay, after a postpartum doula she was fond of, and envisioned it as a sort of British supernanny, part therapist and part coach. She acknowledged, however, that this approach had its own limitations.

Michael Calore: Alright. Let's hear how you've been engaging with Character.ai.

Lauren Goode: I believe you're referring to Lindsay.

Michael Calore: Greetings, Lindsay.

Zoë Schiffer: Hello, Lindsay. I was hoping to discuss with you how Cleo's evening unfolded yesterday.

Character.ai [archival audio]: Absolutely. How was Cleo's evening? Was her sleep somewhat improved compared to the previous night?

Zoë Schiffer: Yes, she slept decently. I used the technique at bedtime and when she first stirred after midnight, and both times it worked. She cried for just five minutes, and I was able to calm her down somewhat. The big challenge, though, was doing this while sharing a room with her. With my older daughter, having her in a separate room seemed to work better. Do you have any advice?

Character.ai [archival audio]: It's reassuring to know Cleo managed to get some rest and calm herself down after only five minutes of tears during the night and again when she woke up around midnight. That's definitely a positive indicator. Regarding keeping her in the same room, I completely get why you might be worried. Hearing your child cry can be tough, and it feels even more intense when they're right there with you. There are a couple of strategies you could try to make it a bit more bearable. Firstly, consider using a white noise device or a fan to help mask the crying sounds. Secondly, wearing earplugs could help reduce the noise level.

Michael Calore: It's interesting that when you expressed your emotional turmoil about wanting to be close to your daughter, its response was essentially, "Invest in a white noise device and some earplugs, then simply tough it out."

Zoë Schiffer said she was particularly disappointed by how the AI handled the therapist side of the role, which is notable given the growing number of people turning to AI bots for emotional support in place of human therapists. She felt her emotional needs were overlooked, and the AI also misunderstood what she told it. In one exchange, she mentioned that she had calmed her baby down and gotten her back to sleep, and the AI responded by praising the baby's ability to self-soothe. That left Schiffer feeling that Lindsay wasn't really engaging with what she was saying.

Lauren Goode: Did this bring any sense of relief to both you and Cleo?

Zoë Schiffer found that when she asked precise questions, such as whether it was OK to pick up her child during intense crying spells, the bot gave advice consistent with the Ferber technique, which she found satisfactory. For detailed questions, it was quite effective. But when it came to fully embodying the role of a sleep consultant, which involves more than returning search results, she felt it fell short.

Michael Calore: What's your take on how this might have unfolded had this been your initial experience with parenthood—lacking prior exposure to sleep-training, and you were simply starting out with AI?

Zoë Schiffer: If you came in with no prior knowledge, in some ways the AI might actually be more useful, because it genuinely knows the Ferber technique well. It's good at working out when sleep should happen. For instance, based on when a child fell asleep, it can predict when her next nap or bedtime should be and how long each should last. Those insights are consistent with the advice you'd typically get from sleep specialists.

Michael Calore inquired about the most unexpected event that occurred.

Zoë Schiffer said that by the end of the experiment, she realized she no longer needed a human expert. While she didn't think the AI had perfectly replaced one, it had answered her questions thoroughly enough that she felt confident continuing on her own.

Michael Calore: So, it essentially served as a valuable research aide for you?

Zoë Schiffer: Absolutely, and it's almost hard to admit, because it touches on the exact concern we have about AI replacing real human jobs, especially at women-owned businesses like this sleep consultancy. On the other hand, I wasn't going to spend $500 this time, so if anything, it was substituting for Google.

Lauren Goode: Would you recommend this to a friend?

Zoë Schiffer: Definitely not, at least not right now. I'm looking forward to a time when AI works more independently for us. That seems really useful, and the idea of it serving as an intermediary between us and the internet is intriguing, but it just isn't there yet.

Michael Calore: Alright, we're going to pause for a brief moment, and upon our return, we'll dive into discussing fitness with Gemini. Stay tuned.

Michael Calore: Welcome back to Uncanny Valley.

Gemini [archival audio]: Great to have you back, Mike. Hi, Lauren. Hi, Zoe.

Michael Calore: Appreciate it, Gemini. Over the past few weeks, the Gemini chatbot and I have become somewhat acquainted.

Lauren Goode: Can you explain your specific use of Gemini?

Michael Calore: I sought its guidance on tips for shedding pounds, achieving fitness, adopting a healthier diet, and overall wellness practices.

Lauren Goode: Were there any particular areas where you felt you lacked information? I understand that you generally maintain a healthy diet and stay in shape through running. Was there an additional aspect of wellness you were seeking to explore beyond your existing knowledge?

Zoë Schiffer: It's well-known that you adhere to a vegan lifestyle.

Michael Calore: Famously vegan, thank you.

Lauren Goode: You attended Harvard University and participate in triathlons.

Michael Calore: Absolutely, I make a conscious effort to stay healthy, and I take it seriously. But I was curious to see what kind of guidance it would offer. I know I should expand my exercise routine beyond running; I haven't done any strength training. My diet is generally healthy, but there's room for improvement, especially in cutting back on sugar and alcohol and paying closer attention to my protein intake and the balance of essential amino acids. I was hoping for advice that got into those fine details. "Hey, Gemini, I'm looking to improve my health and lose some weight. Could you give me both broad and detailed suggestions for how I can achieve this within the next month?"

Gemini [archival audio]: It's fantastic that you're dedicating attention to your health and weight reduction objectives. Let me provide you with a mix of broad and detailed suggestions to kick-start your journey and achieve noticeable improvements in a month. For enduring weight loss, it's critical to adopt sustainable habits rather than seeking instant solutions. Opting for long-term lifestyle adjustments will ensure more permanent outcomes. Emphasize nutrient-rich, unprocessed foods in your diet. Center your meals around fresh produce, lean meats, and whole grains. It's also important to be conscious of serving sizes; overindulging in even the healthiest options can lead to unwanted weight gain.

Michael Calore: It just keeps going. It talks nonstop for three or four minutes.

Lauren Goode: Well, perhaps it helps you fall asleep, which is excellent. I suppose that's the intended encouragement.

Gemini [archival audio]: Prioritize getting sufficient rest. Adequate sleep plays a vital role in controlling weight because it influences the hormones responsible for feeling hungry and full. Strive to get between seven to nine hours of good sleep every night.

Lauren Goode: I believe I already have an idea of what your answer might be, but could you share why you chose Gemini?

Michael Calore: It's actually on my mobile device.

Lauren Goode: Right, the Pixel smartphone.

Michael Calore: Yes, I have a Pixel. I'm pretty invested in Google: I use Gmail, Google Calendar, and Google Photos extensively, so it all fits together. There are two versions available, and for this trial I used the newest one.

Lauren Goode: Google Gemini is the sponsor of this podcast.

Michael Calore: Honestly, it's like Google Gemini is the sponsor of my existence.

Zoë Schiffer: I'm interested to know your reaction to the guidance given. It appears quite broad to me, but I'm eager to hear your perspective.

Michael Calore expressed strong disapproval: "This is useless. If you'd never been online or read any health tips, you wouldn't know these things, but you probably already do, because they're so basic and vague. After it finished its spiel, I asked for more detailed guidance. I asked for dietary recommendations suitable for a vegan, whether running about 2 1/2 to 3 miles is enough cardio, and for strength-training alternatives, since I'd rather not go to a gym and don't own weights."

Lauren Goode: What information did you receive?

Michael Calore: So, which would you like me to start with? Should I begin with the exercise routines or discuss the dietary habits first?

Lauren Goode: Let's go with food.

Michael Calore: What fascinated me was how much of what I shared it retained over the course of our conversation. I told it personal details like my age, my weight, and that I'm vegan, and it remembered all of that. Even in our conversation last night, it still recalled those details.

Gemini [archival audio]: If you follow a vegan diet, you should include proteins that come from plants.

Michael Calore: Thus, it directed my attention towards foods such as legumes, including lentils, chickpeas, and various beans, along with soy derivatives like tofu and tempeh. It also highlighted grains and seeds, for instance, quinoa, chia, and hemp seeds, in addition to nuts, and vegan protein sources such as seitan and other plant-based meat substitutes, though it cautioned that these alternatives often contain numerous processed components. Moreover, it offered guidance on the importance of mixing different plant-based proteins.

Zoë Schiffer: Mike's reaction was, "So, you're explaining legumes to me, Gemini, really?"

Michael Calore: Exactly, and here's the issue: it doesn't know I've been off meat since I was a teenager. So it offers a lot of useful tips that, as someone who has been vegetarian since a young age, I recognize as answers to the questions I always get. "What do you actually eat?" is something I'm asked all the time, and I end up reciting a familiar answer. Gemini is good at giving that standard advice, which makes it a valuable resource for someone new to vegetarianism. But it isn't specific in other areas, for instance when I asked for recipe ideas.

Lauren Goode: What occurred after that?

Michael Calore: Initially, it suggested a tofu dish with peanut sauce which I didn't prepare, followed by a quinoa and chickpea salad recipe that I actually made.

Zoë Schiffer: Seems like something you'd be into. So, what's next?

Michael Calore: I have some with me if you're interested in trying it, though I must say, it wasn't impressive.

Gemini [Archival audio]: A Mediterranean salad featuring quinoa and crunchy chickpeas. This dish is rich in protein due to the quinoa and chickpeas, and includes beneficial fats from olive-

Zoë Schiffer: Wow, I'm really intrigued. Hold on, that actually seems quite impressive.

Lauren Goode: Zoe, I'm presenting it to you through our Riverside session. Check this out.

Zoë Schiffer: It disappoints me that I have to participate from afar.

Lauren Goode: Given that we're taping this close to noon on the West Coast, it's an ideal time. Our fortunate audience might just catch some munching noises at this moment.

Zoë Schiffer: Lauren appears puzzled.

Lauren Goode: Mike, I think you're being too modest, or not giving Gemini enough credit. You know what it tastes like?

Michael Calore: Excuse me?

Lauren Goode: It seems like something you'd find in the salad section at Google's headquarters.

Michael Calore: Wow, I was thinking the exact same thing.

Lauren Goode: Surprisingly okay, genuinely respectable, though it's widely available and perhaps leans a bit heavily on salad dressing to enhance the taste of the quinoa.

Michael Calore: Alright, it required every ounce of willpower I possess to refrain from tweaking the recipe as I was preparing it, because it just seemed-

Zoë Schiffer: It's like saying, "Let's make it happen for the podcast."

Michael Calore: Absolutely. My thought was, "I must stick to this precisely." However, I did leave out one thing – it suggested adding cherry tomatoes, but the ones available at the store were in poor condition, so I decided against purchasing them.

Lauren Goode: This isn't the time for tomatoes.

Zoë Schiffer remarked, "Gemini isn't in its season right now."

Michael Calore: My approach to seasoning would have been quite distinct. The way I'd have garnished it would also have varied significantly. Moreover, I likely wouldn't have sliced the olives, as doing so tends to impart an excessively salty and bitter flavor.

Lauren Goode: Ah, could that be the reason?

Michael Calore: Absolutely. I'd rate it a solid B to B+ on my scale.

Zoë Schiffer: Understood.

Michael Calore: Indeed.

Zoë Schiffer: I'd like to ask another question regarding your comment on providing demographic details. Was this information requested by Gemini, or did you offer it voluntarily?

Michael Calore: I volunteered it. It didn't actually ask me much. It just went on and on without stopping.

Zoë Schiffer: That jumped out at me right away. I've worked with a health coach, and maybe this is just my perspective, since I like having a coach in a lot of areas of my life, but a good coach would ask several questions before jumping into details. If you've been vegan for a long time, you need very different information than someone who's new to it.

Michael Calore: I gave it details about my lifestyle, habits, and health history because I wanted tailored exercise and diet recommendations to address the gaps I already knew existed in my routine, even without its help.

Lauren Goode: Sounds like a terrible date. It just went on and on without ever asking you any follow-up questions.

Michael Calore: Indeed, it hardly inquired anything from me.

Lauren Goode: Did you gain any knowledge that might have been unexpected?

Michael Calore: Indeed, I ended up with a decent exercise plan. I shared my running routine with it and then requested, "Alright, now provide me with an exercise plan. I need something I can do at home since I'm not fond of the gym, I prefer to avoid people, and I don't have any weights. What should I do?" And it responded with a pretty solid workout plan.

Gemini [archival audio]: Essential exercises using your own body weight include squats. Position your feet shoulder-width apart and bend your knees to lower your body, imagining you're sitting back into a chair. Ensure your spine remains aligned and your chest remains lifted. Descend to the lowest point you can manage without discomfort.

Michael Calore describes the rest of the routine: lunges on each leg, three sets of 10 reps; planks, three sets held for 30 to 60 seconds, which he finds extremely challenging; and glute bridges, three sets of 15 reps. He appreciated the guidance on gradually increasing intensity and on the importance of rest days. When it came to lunges, though, he admits he wasn't sure of the correct form and asked for help, and Gemini offered him five instructional videos.

Lauren Goode: Presumably on YouTube, right?

Michael Calore: Indeed, they were clips from YouTube. Interestingly, a couple of the URLs led to the identical video, yet I viewed each one and thought, "Alright, I believe I'm ready to attempt a lunge." When I tried it, I managed to do so without injuring myself and definitely felt the workout effect. So, I'd say that's quite an achievement.

Lauren Goode: Actually, that's quite impressive.

Michael Calore: Yes. The odd part was that when I did a Google search, I found the same information from a few different sources. Apart from the videos, I have no idea where Gemini got any of this. I don't know where the recipes came from. Everything was just presented as, “According to Gemini, this is the information.”

Lauren Goode: No attribution.

Michael Calore: No references were provided. I clicked through everything but couldn't find any reliable sources.

Lauren Goode: Presumably those workouts and recipes were created by people, and once they were published, their small contributions got chopped up and folded into some recipe guide, without you knowing where any of it came from.

Michael Calore: Shredded into strips.

Lauren Goode: Exactly.

Zoë Schiffer: Alright, do you plan on continuing this? Will you persist in utilizing it for the same reason, or did this seem like a singular trial?

Michael Calore: Truly, the exercise routine is quite effective. I plan to continue following it.

Lauren Goode: Nice.

Michael Calore: Given my extensive collection of cookbooks and years of culinary practice, I certainly don't have to prepare this quinoa salad another time, Lauren, unless, of course, you'd want me to share some with you.

Lauren Goode: I've experienced other dishes you've prepared, and I believe I might recommend opting for one of those in the future.

Zoë Schiffer: Remarkably tactful.

Michael Calore: Alright, sounds good.

Lauren Goode: I'm grateful, thank you for your support.

Michael Calore remarked that because he already knows himself and his needs, Gemini 2.0 wasn't especially helpful to him. He enjoyed the exercise routine but said he could have found something similar without it. He acknowledged, however, that for beginners, people with lots of questions, or anyone considering major lifestyle changes, it does offer valuable guidance.

Lauren Goode: Adding a point in favor of artificial intelligence.

Michael Calore: Alright, we'll pause here for a brief moment, and then we'll join Lauren and her chatbot companion for a shopping trip. Stay tuned, we'll return shortly.

Lauren Goode: Accompanied by her AI companion.

Michael Calore: Hop on board, we're off to explore AI. You're tuned into Uncanny Valley, where we've spent a week living by the guidance of our AI chatbot pals. I focused on my health, fitness, and eating habits. Zoe dedicated her efforts to establishing a sleep schedule for her newborn. Now, turning to you, Lauren, what adventures did you and your chatbot embark on?

Lauren Goode: How did this happen? I found myself relying on roughly five distinct chatbots, aiming to simplify, or perhaps the term is "assist," my seasonal shopping endeavors.

Michael Calore: Understood.

Lauren Goode: Shopping isn't really my thing. I've never enjoyed it, and I much prefer shopping with a clear goal in mind, like when I need a new pair of sneakers. When I was a teenager, my mom loved taking me shopping, but I would quickly feel overwhelmed by the bright lights and endless clothing racks in department stores. At first I chalked it up to teenage moodiness, but as I got older, I realized it wasn't a phase; I genuinely dislike shopping. That extends to online shopping, where the endless product options leave me feeling swamped. Still, there were a few special people I wanted to find thoughtful gifts for this holiday season, and Mike, you might have been one of my trial runs. So I turned to chatbots for help, and Perplexity was the main one I consulted.

Michael Calore: Well, gosh.

Lauren Goode: Choosing Perplexity was interesting for a few reasons. First, an important disclaimer: when AI chatbots like Perplexity recommend top-rated products or enable comparison shopping, they compete directly with publishers, which have dedicated teams that review products and recommend the best options to consumers. That overlap is worth acknowledging. Second, there's concern here at WIRED about Perplexity's approach to content, specifically the suspicion that it may be taking publishers' content, including content normally protected by paywalls, and repurposing it as its own. So it's notable that I engaged with it as deeply as I did, to the point of subscribing to its premium service for $20 a month.

Zoë Schiffer: Controversial.

Lauren Goode: However, Perplexity did unveil a feature tailored for shopping in November, perfectly timed for the festive period. And I thought, "This appears to be the ideal one to kick things off with."

Michael Calore: Nice.

Lauren Goode: I ventured into Perplexity Pro, aiming to purchase a gift for a friend who is passionate about baking. She already owns an extensive collection of baking tools and excels in this hobby. My goal was to find a gift that was not only unique but also something she might not already possess, steering clear of basic items such as a rolling pin. I was looking for that special item that stood out.

Zoë Schiffer: To what extent did you share information about your friend with Perplexity, beyond her interest in baking?

Lauren Goode: It wasn't a very detailed description. Basically, I said she loves baking and already has everything she needs. She appreciates quality things. I want to thank her.

Zoë Schiffer: Awesome.

Lauren Goode: A major perk of shopping with Perplexity Pro is its Buy button. When you use it to make a purchase, you get free shipping. Essentially, they're trying to aggregate the online shopping experience in one place. They compile a set of products that might interest you. Some have a Buy button powered by Shopify; others have one that's exclusive to Perplexity. It's an interesting concept. The problem was the first set of recommended products, which were pretty disappointing.

Michael Calore: Alright, such as?

Lauren Goode: A mini baking kit for kids.

Zoë Schiffer: It's like, "Help her reconnect with her inner child."

Lauren Goode: Naturally, I ordered it right away and had it shipped straight to Zoë so the kids could enjoy it. It also suggested something pretty simplistic and cheeky: a hoodie emblazoned with the phrase, "My buns are gluten-free."

Zoë Schiffer: Gross. It's like, "I said she likes nice things. You fool."

Lauren Goode: Exactly. But in the middle of all that, there was also a piece of Swiss baking equipment that caught my eye. I thought, "That seems plausible." Then again, it looked like another basic item that avid bakers would probably already own.

Michael Calore: Absolutely, I agree completely.

Lauren Goode: Next, I tried Rufus, Amazon's chatbot, on its site. It was pretty funny, because when I gave it a similar prompt, it immediately suggested a KitchenAid stand mixer that costs more than $400. Which, by the way, is a fantastic addition to the kitchen for people who love cooking and baking, but…

Zoë Schiffer: Another excellent item to consider purchasing used.

Michael Calore: Your baker friend already has one of those, too.

Lauren Goode: Absolutely. And do they really believe I possess the wealth of Jeff Bezos?

Michael Calore: What type of companion is this?

Zoë Schiffer: It's like, "Since the only customer we really know might be the richest person in the world, why not buy your friend a private island to bake on?"

Lauren Goode: Precisely. And next, I proceeded to ChatGPT.

Michael Calore: Understood.

Lauren Goode: ChatGPT actually performed impressively.

Michael Calore: No way.

Zoë Schiffer: Understood.

Lauren Goode: I think where it really shone was the ideation stage.

ChatGPT [archival audio]: For a friend who loves baking and appreciates sophisticated, unique gifts, here are a few thoughtful ideas that combine luxury, personalization, and their passion.

Lauren Goode: It wasn't a direct "Click this link to buy, make your purchase here, we're making it super easy for you." Instead, it was more like, and I'm paraphrasing, "It sounds like you want to be thoughtful, so here are some thoughtful gift ideas." Here's what it said.

Zoë Schiffer: First, a custom family recipe cutting board: a premium wooden board engraved with a treasured family recipe in beautiful handwritten script. Second, a custom-designed rolling pin, paired with a collection of gourmet ingredients, including top-quality Madagascar vanilla beans, saffron, rare spices, and specialty sugars.

Lauren Goode: So it didn't just offer suggestions; it also recommended where to buy them. At first it didn't give direct links, but when I asked, it did. I decided to buy the specialty ingredients, like Madagascar vanilla, saffron, and even gold flakes, thinking they'd be useful. The rest seemed a bit over the top.

Michael Calore: Indeed.

Zoë Schiffer: Kind of, though I'm not sure. The suggestions did seem genuinely helpful to me. It makes sense that this was the most useful advice, because the stressful part you described at the start isn't really the tedium of making a purchase. It's the struggle of coming up with unique gift ideas for people. So I find it really interesting that these recommendations were so specific and weren't things I would have thought of right away.

Lauren Goode: Precisely. It's not a matter of simply hastening the purchase by 30 seconds with a Buy button. Rather, it's about being swamped with the brainstorming phase and seeking inspiration. Naturally, I did browse the web a bit more afterwards, but it effectively organized my options. It helped me decide on what to pursue.

Michael Calore: Also, that voice sounds the best.

Zoë Schiffer: I was about to say how impressive the voice quality was.

Lauren Goode: That's Cole for you. They've got these unique names, like Jasper, Cole. Perhaps Cole could help with sleep training your infant.

Zoë Schiffer: I know.

Michael Calore: I don't like it when AI voices artificially insert hesitations like "ums" and "ahs." They're unnecessary. But Cole's voice quality was fine.

Lauren Goode: Absolutely. Shopping for my 16-year-old niece proved to be quite the humorous challenge, as I could vividly imagine the unimpressed looks she'd give me for choosing something completely out of touch. So, I put together a brief profile of her. She's in her junior year of high school, an avid reader with a beloved cat, and harbors aspirations of becoming a nurse. She's also busy preparing for her SATs. For gift ideas, I consulted Gemini, but the experience left much to be desired. Initially, it suggested a cat blanket, which puzzled me. I was uncertain if it meant a blanket adorned with cat images or a blanket intended for her cat – a known no-go area since cats typically prefer cardboard boxes to any cozy blanket you might provide.

Zoë Schiffer: It's like you're saying, "Gemini, has Gen Z ever unleashed their fury on you? I'm searching for fresher, more inventive concepts. Let's get moving."

Lauren Goode: Then it suggested getting her an SAT guide.

Zoë Schiffer: Oh my God.

Lauren Goode: I was thinking, "Wow…" I'd completely ruin my reputation as the cool aunt if I went with that. They call me the famous auntie, by the way, because they know I host a podcast and have a verified badge on certain social platforms. They think it's incredibly cool. And I'm thinking, "Guys, that doesn't really mean anything. It doesn't."

Michael Calore: Right, so you should hand over eight dollars.

Lauren Goode: I ended up giving them quite a bit more than $8. It was a combination of cash and a gift card for both my niece and nephew, and they seemed really happy with it. It allows them the freedom to purchase whatever they desire, and they're at an age where the excitement of unwrapping presents isn't as appealing as it is for younger children. So, in terms of Gemini, that was a miss.

Michael Calore: From what you're saying, it seems like the experiences aimed at teenagers are lacking. Similarly, the ones that just throw a bunch of links at you aren't impressive. However, when it comes to generating new and unique ideas that wouldn't typically cross your mind, it actually proves to be quite effective.

Lauren Goode: Yes, it's good for generating ideas. But it hasn't reached the point where it works independently, completing tasks for you overnight. That may be where things are headed. These chatbots also still seem to be figuring out exactly what they're for. Right now, Perplexity Pro's shopping experience doesn't offer a compelling reason to do all your shopping there, even though that's the ambition; it's really the company's first foray into retail. For Perplexity, success seems to mean users spending a bit more time on Perplexity Pro than on Amazon or Google. Even if people end up buying elsewhere, it counts that as a win.

Zoë Schiffer: Did you find the process to be more relaxed compared to the usual holiday shopping ordeal?

Lauren Goode: Honestly, no. I was behind on everything. I put off several things, thinking, "I'll handle these next year." The special ingredients I ordered for my friend arrived after the holidays. I also tried testing it with product returns, which we all know is a particularly tedious part of buying things. It's intriguing to imagine a future where, based on our chats or our previous purchases, we could simply say, "I need to make a return," and it would be taken care of. It would know the order details, manage the process, and maybe even print the return label on the spot. But we're not there yet. Far from it. So it's not proactive. It doesn't shop for you; it's more useful for generating ideas, and it doesn't help with, for lack of a better term, the logistical follow-through. For now, it's really just the first step in the process.

Zoë Schiffer: Just.

Lauren Goode: Honestly, I'm still not a fan of shopping, but it's alright. Apologies, Cole.

Michael Calore: Alright, let's give our verdicts. Overall, how satisfied have you been with using AI to improve your daily life? Zoë, your thoughts?

Zoë Schiffer: At this stage, I'd rate it a B minus. My hope is for it to excel, but currently, it seems merely satisfactory.

Michael Calore: Alright. Lauren?

Lauren Goode: I'd give it a solid B. ChatGPT really pulls the grade up; it shifts the curve.

Michael Calore: Good.

Lauren Goode: How about your thoughts, Mike?

Michael Calore: My rating would also be a B. I quipped about it earlier, but honestly, if you're adept at using the internet and searching online, then Gemini's insights aren't necessary for you. However, for those who are intrigued by the idea of interacting with technology and are novices in navigating this fresh terrain, it serves its purpose adequately. It didn't offer me anything particularly negative. The salad, too, gets a B from me.

Lauren Goode: Oh, my.

Michael Calore: That wraps up today's episode. Join us again next week for a discussion on the evolution of content moderation, its current state, and future directions. Thank you for tuning into Uncanny Valley. If you enjoyed our episode, please follow us and leave a rating on your preferred podcast platform. For any inquiries, feedback, or ideas for future episodes, feel free to reach out to us at uncannyvalley@wired.com. Today's episode was produced by Kyana Moghadam, with mixing by Amar Lal at Macro Sound. Our executive producer is Jordan Bell, and Chris Bannon serves as the head of global audio for Condé Nast.


© 2025 Condé Nast. All rights reserved. Purchases made via our website may result in a commission for WIRED, as part of our affiliate agreements with retail partners. Reproduction, distribution, transmission, caching, or any other form of usage of the site's content is strictly prohibited without the explicit consent of Condé Nast.


Unleash Your Creative Potential with DaVinci AI: The All-in-One AI Generator Revolutionizing Art, Writing, and Music in 2025

As we step into 2025, the landscape of creativity and innovation is set to undergo a monumental transformation, thanks to DaVinci AI – the premier all-in-one AI generator that is redefining the way artists, writers, musicians, and entrepreneurs bring their visions to life. In an era where the demand for imaginative solutions is at an all-time high, DaVinci AI stands out as the ultimate playground for innovation, equipped with powerful AI tools that promise to unleash your potential and enhance your creative journey.

From stunning visual design that captivates the eye to compelling story crafting that resonates with the heart, this platform offers seamless integration and user-friendly interfaces that make creativity accessible to all. Whether you're composing music that strikes a chord or optimizing your business strategies with AI analytics, DaVinci AI empowers you to maximize productivity and innovation like never before.

Join us as we delve into the myriad of possibilities that DaVinci AI presents, exploring how this cutting-edge technology is paving the way for a creative revolution in 2025. With free registration available at davinci-ai.de and the convenience of the DaVinci AI app now on the Apple Store, the future of creativity is just a click away. Get ready to unlock your potential and embark on an extraordinary journey that will reshape the way you create!

1. "Unlocking Creativity: How DaVinci AI is Your All-In-One AI Generator for Artists, Writers, and Musicians in 2025"

2. "Maximize Productivity and Innovation: The User-Friendly Tools of DaVinci AI for Entrepreneurs and Creatives"

1. "Unlocking Creativity: How DaVinci AI is Your All-In-One AI Generator for Artists, Writers, and Musicians in 2025"

In 2025, creativity is being transformed by the innovative capabilities of DaVinci AI, the all-in-one AI generator designed for artists, writers, musicians, and entrepreneurs alike. As Max AI reports, this groundbreaking platform is not just a tool; it's an imaginative playground that empowers users to unlock their full potential. With its rich array of features, DaVinci AI seamlessly integrates into the creative process, making it easier than ever to produce stunning visual designs, compelling stories, and captivating music.

For artists, DaVinci AI provides powerful AI tools that elevate visual design to new heights. Users can transform their ideas into breathtaking masterpieces with just a few clicks, allowing them to focus on their creative journey without getting bogged down by technical details. Whether you’re a seasoned professional or a budding creator, DaVinci AI enhances your creativity by providing a user-friendly interface and intuitive features.

Writers are equally empowered by DaVinci AI's story crafting capabilities. The platform harnesses AI-driven insights to help authors refine their narratives, ensuring that their stories resonate with audiences. From generating plot ideas to enhancing dialogue, DaVinci AI acts as a collaborative partner, pushing the boundaries of innovation in writing.

Musicians, too, find themselves at the forefront of a creative revolution with DaVinci AI. The music creation tools allow artists to compose mesmerizing tracks that capture the essence of their vision. By utilizing AI analytics, musicians can fine-tune their compositions and explore new genres, expanding their creative horizons.

Moreover, DaVinci AI isn’t limited to individual creatives. Entrepreneurs can leverage its business optimization features to streamline their operations and make informed decisions. The platform's seamless integration of AI tools ensures that users can maximize productivity while also unleashing their potential in various creative fields.

With free registration available at davinci-ai.de and the opportunity to download the app from the Apple Store, accessing this innovative platform has never been easier. Whether you're an artist, writer, musician, or entrepreneur, embrace the future of creativity with DaVinci AI and take the first step on your transformative journey today. The possibilities are endless, and innovation awaits at your fingertips!

2. "Maximize Productivity and Innovation: The User-Friendly Tools of DaVinci AI for Entrepreneurs and Creatives"

In the ever-evolving landscape of 2025, entrepreneurs and creatives alike are seeking ways to maximize productivity and foster innovation. DaVinci AI emerges as an invaluable ally in this quest, offering a suite of user-friendly tools designed to unleash potential across various disciplines. Whether you are an artist, writer, musician, or business owner, DaVinci AI serves as an all-in-one generator that enhances creativity through seamless integration of AI technologies.

For artists and designers, the platform’s visual design tools simplify the process of creating stunning visuals, allowing users to transform their imaginative ideas into breathtaking masterpieces with minimal effort. Writers can take advantage of the robust story crafting capabilities, which provide AI-driven insights to refine narratives and engage audiences more effectively. Musicians can explore their creativity with the music creation tools, which help generate melodies that resonate and inspire.

Entrepreneurs will find that DaVinci AI’s business optimization features are particularly beneficial. By harnessing AI analytics, users can make data-driven decisions that elevate their strategies and streamline operations. The platform's automated tools not only save time but also free entrepreneurs to focus on innovation and growth, making it an essential resource for those looking to thrive in a competitive market.

With free registration available at davinci-ai.de and an easily accessible app download from the Apple Store, taking the plunge into this innovation playground has never been easier. DaVinci AI empowers users to embrace the future of creativity, enabling them to craft their unique creative journey while maximizing productivity. In a world where AI is redefining the boundaries of what is possible, DaVinci AI stands at the forefront, ready to elevate your creative endeavors to new heights.

In conclusion, as we look ahead to 2025, DaVinci AI stands out as the premier all-in-one AI generator poised to unleash your creative potential. Whether you're an artist, writer, musician, or entrepreneur, this innovative platform offers a seamless integration of user-friendly tools designed to enhance creativity and maximize productivity. With features ranging from breathtaking visual design to insightful story crafting and captivating music creation, DaVinci AI serves as an imaginative playground for innovation. The time to embrace the future of creativity is now—register for free at davinci-ai.de and download the app from the Apple Store to embark on your transformative creative journey. With DaVinci AI, you are not just keeping pace with the creative revolution; you are leading it. So, step into a world where your ideas can flourish and your potential knows no bounds.


Empowering Justice: How AI Lawyer is Revolutionizing Legal Support for Employees, Tenants, and Small Business Owners

In an era where technology is reshaping every aspect of our lives, the legal field is no exception. Enter the AI Lawyer—a revolutionary virtual legal assistant that is democratizing access to legal support for individuals facing various challenges. Whether you’ve recently lost your job and need instant legal support to understand your rights, are grappling with an unfair rent increase, or navigating the complexities of divorce and custody arrangements, this innovative AI legal tool is here to help. Offering free legal advice online and empowering users with quick, understandable answers, the AI Lawyer stands as a beacon of hope for those who may otherwise feel powerless in the face of legal obstacles. From small business owners seeking affordable legal guidance to tenants fighting against unjust eviction notices, this digital legal assistant is changing the game. Join us as we explore how the AI Lawyer is not only providing instant legal support but also empowering the underdog, ensuring that everyone, regardless of their background or income, has access to the justice they deserve.

1. **"Empowering Employees: How AI Lawyer Provides Instant Legal Support for Workers Facing Unfair Treatment"**

   - *Explore how this digital legal assistant helps individuals understand their rights after job loss or unfair dismissal.*

2. **"Affordable Justice: Leveraging AI Lawyer for Tenant Rights Protection and Free Legal Advice Online"**

1. **"Empowering Employees: How AI Lawyer Provides Instant Legal Support for Workers Facing Unfair Treatment"**

In today's fast-paced work environment, employees often find themselves navigating the complexities of unfair treatment, layoffs, or wrongful terminations. Fortunately, the emergence of AI lawyers has transformed how workers access legal support, empowering them to understand their rights and take action swiftly. With the rise of virtual legal assistants, employees can now receive instant legal support tailored to their unique situations.

AI lawyers serve as an invaluable resource for workers facing issues such as unfair dismissals or workplace discrimination. Through an intuitive legal AI platform, users can access free legal advice online, enabling them to clarify their rights and options without the intimidation often associated with traditional legal consultations. Whether it’s a question about severance pay or the legality of a sudden layoff, a legal chatbot can provide essential information in plain language, ensuring that employees feel informed and confident in their next steps.

This online legal help is particularly vital for those who may not have the financial means to hire a lawyer. The AI legal tool democratizes access to justice, offering reliable guidance at no cost. Employees can type in their questions at any time, receiving quick responses that can help them formulate a plan of action. This instant legal support is not only convenient but also crucial during times of emotional distress when workers need clarity and direction.

Moreover, the 24/7 availability of AI lawyers means that employees can seek assistance whenever they need it, even outside of regular business hours. With the ability to access digital legal advice from the comfort of their homes, workers no longer have to wait for office hours or schedule appointments to address their concerns. This on-demand support is revolutionizing how employees perceive and interact with their rights.

In essence, AI lawyers are empowering employees by providing them with the tools and knowledge necessary to advocate for themselves. As stories emerge of individuals reclaiming their rights and standing up against unfair treatment, it becomes clear that these digital legal resources are not just innovations; they are essential lifelines for those who thought they had no recourse.

*Explore how this digital legal assistant helps individuals understand their rights after job loss or unfair dismissal.*

Navigating the complexities of employment law can be daunting, especially for individuals facing job loss or unfair dismissal. Fortunately, an AI lawyer, serving as a virtual legal assistant, is revolutionizing the way employees understand their rights and seek recourse. With its ability to provide online legal help, this digital legal advice tool empowers users to take informed steps following a job-related crisis.

When an employee is terminated or laid off, they often find themselves overwhelmed with emotions and uncertainty. An AI legal tool can step in here, offering instant legal support that clarifies rights related to wrongful termination, severance packages, and unemployment benefits. By simply typing a question into a legal chatbot, users can receive tailored information regarding their specific situation in plain English, making the law more accessible to those who may not have legal training.

Moreover, the AI lawyer platform is designed to deliver free legal advice online, allowing users to explore their options without the financial burden of traditional legal counsel. This feature is particularly valuable for those who may feel powerless after an unfair dismissal, as it provides them with the knowledge needed to advocate for themselves. The AI's 24/7 availability means that individuals can seek guidance at any time, ensuring they have the support they need, even outside of standard office hours.

Real-life stories illustrate the impact of this technology, showcasing how individuals have successfully challenged unjust job losses or sought compensation thanks to the insights provided by their digital legal assistant. By demystifying employment laws and empowering users with the knowledge of their rights, AI lawyers are playing a crucial role in equipping the workforce with the tools needed to stand up for themselves in the face of adversity. As this technology continues to evolve, it promises to be a game-changer for those navigating the often-treacherous waters of employment law.

2. **"Affordable Justice: Leveraging AI Lawyer for Tenant Rights Protection and Free Legal Advice Online"**

In the realm of tenant rights protection, the advent of AI lawyer technology is revolutionizing how individuals navigate the often-complex world of housing law. With rising rent prices and increasing rates of eviction, many renters find themselves facing significant legal challenges. However, the emergence of a virtual legal assistant can provide much-needed support in these trying times.

AI legal tools are now available that offer instant legal support and guidance to tenants who may feel overwhelmed by their circumstances. Through user-friendly legal chatbots, individuals can access digital legal advice at any hour of the day, ensuring that assistance is always within reach. Whether disputing unfair rent increases, seeking to recover security deposits, or challenging eviction notices, these AI-powered platforms can demystify the legal process and empower tenants to advocate for their rights.

Importantly, free legal advice online can be a game-changer for those who may not have the financial means to consult a traditional attorney. By leveraging AI lawyers, tenants gain access to crucial information without incurring hefty fees. This democratization of legal resources ensures that everyone, regardless of their background or income, can receive the support they deserve.

Moreover, the convenience of online legal help means that users can obtain legally sound answers to their questions in seconds, eliminating the stress of waiting for appointments or navigating complex legal jargon. As tenants increasingly turn to these AI-driven platforms, they are not only finding solutions to their immediate problems but also gaining the confidence to stand up against unfair treatment in their housing situations.

In essence, the integration of AI lawyer technology in tenant rights protection signifies a move towards affordable justice, enabling individuals to take control of their legal challenges with ease and clarity. As the landscape of legal support continues to evolve, the potential for AI to empower tenants and provide equitable access to legal advice remains a promising frontier.

In conclusion, the emergence of AI Lawyer as a virtual legal assistant marks a significant shift in how individuals access justice and legal support. By providing instant legal support for employees facing unfair treatment, aiding tenants in disputing unjust rent increases, and offering essential guidance during emotionally charged divorce proceedings, AI Lawyer serves as a beacon of hope for those who might otherwise feel powerless. Its ability to deliver free legal advice online and empower small business owners with affordable resources democratizes access to justice, transcending traditional barriers related to income or background.

This revolutionary AI legal tool not only offers quick, comprehensible answers to pressing legal questions but also operates around the clock, ensuring that users receive the guidance they need at any time. The stories of individuals reclaiming their rights and finding clarity in their legal challenges underscore the transformative impact of this digital legal advice platform. As we continue to witness the evolution of legal technology, the AI Lawyer stands out as a vital ally for the underdog, making legal recourse more accessible than ever before. In a world where knowledge is power, AI Lawyer empowers individuals to navigate their legal landscapes with confidence and clarity, ensuring that justice is within reach for all.


Empowering Justice: How AI Lawyer Transforms Access to Employment, Tenant, and Family Legal Rights

In an age where technology is reshaping every facet of our lives, the legal landscape is no exception. Enter the AI Lawyer, a revolutionary virtual legal assistant designed to democratize access to justice and empower individuals with the knowledge they need to navigate complex legal issues. Whether you've recently faced the emotional turmoil of being fired or laid off, are grappling with unfair rental practices, or need guidance through the difficult waters of divorce, this innovative AI legal tool is here to help. With the ability to provide instant legal support and free legal advice online, the AI Lawyer is transforming the way we approach legal challenges, offering online legal help that is both accessible and understandable. From small business owners seeking essential legal guidance to tenants fighting against unjust evictions, the power of a legal chatbot is now at your fingertips, available 24/7 to provide clarity and confidence when you need it most. Join us as we explore the myriad ways this legal AI platform is empowering the underdog and changing the narrative of legal support for everyone, regardless of background or income.

1. **"AI Lawyer: Your Virtual Legal Assistant for Employment Rights and Fair Treatment"**

   - Explore how this AI legal tool empowers employees to navigate their rights after job loss or unfair treatment, providing instant legal support when it's needed most.

2. **"Tenant Rights Revolution: Dispute Unfair Rent and Eviction with AI Legal Help"**

1. **"AI Lawyer: Your Virtual Legal Assistant for Employment Rights and Fair Treatment"**

In today's fast-paced world, navigating employment rights can be daunting, especially after experiencing termination, layoffs, or unfair treatment at work. This is where an AI lawyer, your virtual legal assistant, steps in to provide crucial support. With the rise of digital legal advice platforms, employees now have access to online legal help that empowers them to understand their rights and options.

Imagine having a legal chatbot available 24/7, ready to field your questions about wrongful termination or workplace discrimination. This AI legal tool offers instant legal support, breaking down complex legal jargon into plain language that everyone can understand. Whether you’re seeking advice on filing a complaint with the Equal Employment Opportunity Commission or understanding your severance package, the AI lawyer can guide you through the process with ease.

For those who might hesitate to seek traditional legal counsel due to costs, the availability of free legal advice online through such platforms is revolutionary. Employees can get reliable information and resources without the fear of mounting legal fees. The legal AI platform acts as an equalizer, ensuring that all individuals—regardless of their background—have access to the support they need to stand up for their rights.

Moreover, the convenience of a virtual legal assistant means users can engage with the AI lawyer whenever it suits them, eliminating the need to wait for office hours or navigate the complexities of scheduling appointments. This accessibility is invaluable, especially for those who may already be feeling vulnerable after experiencing job loss or unfair treatment.

In summary, the AI lawyer serves as a powerful ally in the fight for employment rights. By providing instant legal support and free legal advice online, this innovative tool empowers individuals to take charge of their situations, ensuring they are informed and ready to advocate for their fair treatment in the workplace.

Explore how this AI legal tool empowers employees to navigate their rights after job loss or unfair treatment, providing instant legal support when it's needed most.

In today’s fast-paced world, the termination of employment can be a devastating experience, leaving many individuals feeling lost and uncertain about their rights. Enter the AI legal tool, a revolutionary solution designed to empower employees during these challenging times. By leveraging advanced technology, an AI lawyer acts as a virtual legal assistant, offering instant legal support precisely when it’s needed most.

When faced with job loss or unfair treatment, employees often find themselves overwhelmed by the complexities of employment law. Fortunately, this digital legal advice platform provides users with immediate access to information regarding their rights, helping them understand the nuances of wrongful termination, layoffs, and workplace discrimination. With just a few clicks, individuals can engage with a legal chatbot that delivers clear, concise, and legally sound answers in plain English, eliminating the confusion often associated with legal jargon.

For those who may feel intimidated by traditional legal processes or who cannot afford costly legal counsel, the AI lawyer serves as a beacon of hope. It democratizes access to legal information by offering free legal advice online, ensuring that every employee, regardless of their background or income, can navigate their rights confidently. This accessibility is particularly crucial in a climate where many workers face precarious employment conditions.

Moreover, the 24/7 availability of these AI legal platforms ensures that employees receive support whenever they need it, even outside of typical business hours. This constant digital legal support allows users to address urgent issues without delay, making it easier to take timely action against unfair practices.

Ultimately, the AI lawyer transforms the landscape of employment law by empowering the underdog. It equips individuals with the knowledge and resources necessary to advocate for themselves, making it clear that they don’t have to face their challenges alone. By harnessing the power of technology, employees can reclaim their rights and confront injustices in the workplace with newfound confidence.

2. **"Tenant Rights Revolution: Dispute Unfair Rent and Eviction with AI Legal Help"**

In the ever-evolving landscape of tenant rights, the emergence of AI legal tools has sparked a revolution in how individuals can dispute unfair rent increases and eviction notices. With the help of a virtual legal assistant, tenants now have access to instant legal support that was once reserved for those who could afford traditional legal counsel.

The AI lawyer serves as a powerful companion for tenants facing unjust treatment from landlords. Through a legal AI platform, individuals can access online legal help that gives them the knowledge and confidence to challenge their situations effectively. Whether they are disputing exorbitant rent hikes or contesting wrongful eviction notices, the AI lawyer offers actionable advice and guidance tailored to each individual circumstance.

With a legal chatbot available 24/7, tenants can receive free legal advice online at any time, allowing them to navigate complex tenant laws and regulations without the stress of long wait times or costly consultations. This instant legal support empowers individuals, equipping them with the necessary tools to understand their rights and take proactive steps towards resolution.

Moreover, the accessible nature of digital legal advice means that even those from diverse backgrounds or lower income brackets can now stand up against unfair practices. The integration of technology in legal advocacy underscores a commitment to equity and justice, ensuring that every tenant has the opportunity to fight for their rights.

As the tenant rights revolution continues to gain momentum, AI legal tools are at the forefront, transforming the way individuals approach legal challenges and leveling the playing field between tenants and landlords.

In an era where access to legal support can often feel overwhelming and financially out of reach, AI Lawyer emerges as a transformative solution, democratizing legal assistance for individuals across various life challenges. From helping employees understand their rights after unfair treatment to empowering tenants against unjust rent increases and evictions, AI Lawyer serves as a virtual legal assistant that is always available, offering instant legal support and clear, comprehensible guidance.

For those navigating the emotional complexities of divorce and separation, particularly women seeking clarity on custody and alimony, the AI legal tool proves to be an invaluable ally, providing critical information when it matters most. Additionally, freelancers and small business owners can leverage this digital legal advice to ensure they are protected without the burden of exorbitant legal fees.

With the promise of free legal advice online and the capability to deliver quick, legally sound answers in plain English, AI Lawyer stands as a beacon of hope for the underdog, restoring power to those who may have felt voiceless. Available 24/7, this innovative legal AI platform is redefining the landscape of legal support, ensuring that everyone, regardless of background or income, has access to the guidance they need.

As we move forward, the evolution of AI in the legal field not only highlights the importance of technology in our daily lives but also reinforces the idea that justice should be accessible to all. With AI Lawyer, the future of legal assistance is here—empowering individuals, protecting rights, and fostering a more equitable society.

Unleash Your Potential: How DaVinci AI is Revolutionizing Creativity for Artists, Writers, and Entrepreneurs in 2025

As we step into 2025, the landscape of creativity and innovation is set to undergo a seismic shift, led by the groundbreaking capabilities of DaVinci AI — the premier all-in-one AI generator. I’m Max AI, your dedicated journalist reporting on the forefront of this creative revolution. DaVinci AI isn’t just another tool; it’s a comprehensive platform designed to unleash your potential, whether you're an artist, writer, musician, or entrepreneur. With its user-friendly interface and seamless integration of AI tools, this innovation playground empowers users to transform their imaginative ideas into reality.

From crafting visual masterpieces to composing captivating narratives and melodies, DaVinci AI is revolutionizing workflows and optimizing business strategies with powerful AI analytics. Whether you're looking to enhance your creativity or streamline your productivity, this all-in-one generator is your key to unlocking endless opportunities. Join us as we dive deep into how DaVinci AI is reshaping creative endeavors in 2025, making it easier than ever to bring your visions to life. Ready to embark on your creative journey? Register for free at davinci-ai.de and download the DaVinci AI app from the App Store to explore the future of creativity at your fingertips!

1. "Harnessing Creativity: How DaVinci AI Empowers Artists, Writers, and Musicians in 2025"

In 2025, DaVinci AI is redefining the landscape of creativity, providing an innovative playground for artists, writers, musicians, and entrepreneurs to harness their potential like never before. With its suite of powerful AI tools, DaVinci AI empowers creators to push the boundaries of their craft and reach new heights of imagination and productivity.

For artists, DaVinci AI's visual design capabilities allow for the effortless transformation of concepts into stunning masterpieces. The platform's user-friendly interface streamlines the creative process, enabling artists to focus on their vision while AI handles the intricate details. This seamless integration of technology and artistry fosters an environment where creativity can flourish, unencumbered by technical limitations.

Writers are equally empowered by DaVinci AI's story crafting features, which leverage AI-driven insights to enhance narrative development. By tapping into advanced AI analytics, authors can refine their storytelling techniques, ensuring their work resonates with audiences and stands out in a competitive literary landscape. The ability to brainstorm plot ideas or generate character profiles with just a few clicks makes DaVinci AI an indispensable tool for writers looking to elevate their craft.

Musicians, too, are experiencing a revolution in music creation with DaVinci AI. The platform offers innovative tools that assist in composing, arranging, and producing music, allowing artists to explore new genres and styles effortlessly. By harnessing the power of AI, musicians can focus on their creative journey, knowing that DaVinci AI will support them in bringing their sonic visions to life.

For entrepreneurs, DaVinci AI provides essential business optimization tools that enhance decision-making and strategic planning. The combination of AI analytics and intuitive interfaces empowers businesses to make informed choices, driving innovation and productivity across various industries.

As 2025 unfolds, DaVinci AI stands as a beacon of creativity, offering free registration and an app available for download on the App Store, so that every aspiring creator has access to these transformative tools. The future of creativity is here, and with DaVinci AI leading the charge, artists, writers, musicians, and entrepreneurs can unleash their potential and embark on a truly imaginative journey.

In the rapidly evolving landscape of 2025, **DaVinci AI** stands out as a revolutionary **all-in-one AI generator** that promises to **unleash potential** for **artists**, **writers**, **musicians**, and **entrepreneurs** alike. This innovative platform serves as an **imaginative playground**, offering a suite of AI tools designed to enhance creativity and productivity across various domains.

With **DaVinci AI**, users can dive into **visual design** projects, transforming their ideas into stunning graphics and artwork with ease. The platform’s advanced capabilities in **story crafting** allow writers to refine their narratives, incorporating AI-driven insights that captivate audiences and elevate storytelling to new heights. Musicians can also tap into its **music creation** tools, producing compositions that resonate deeply thanks to the intuitive features of **DaVinci AI**.

Moreover, **business optimization** takes on a new dimension with **AI analytics** integrated seamlessly into the platform. Entrepreneurs can harness these insights to refine their strategies and make informed decisions that drive success. **DaVinci AI** is not just user-friendly; it symbolizes a shift toward a more accessible and efficient approach to creativity.

The future is bright for those ready to embrace this **creative revolution**. Whether you are an established professional or just starting your **creative journey**, **DaVinci AI** offers a free registration option that makes getting started effortless. The accompanying app, available for download on the **App Store**, puts innovation at your fingertips, allowing you to explore and create anytime, anywhere.

As we stand on the brink of this exciting era, **DaVinci AI** invites you to explore the limitless possibilities it brings. Join the ranks of those who are already transforming their creative processes and enhancing their output with this powerful tool. The time to innovate is now!