AI at a Crossroads: The Impact of the U.S. Presidential Election on Artificial Intelligence Regulation and Innovation

A Victory for Trump Might Accelerate Risky AI Advances

Should Donald Trump secure the presidency in the upcoming November election, the safeguards surrounding the advancement of artificial intelligence could be weakened, amidst escalating concerns over the risks posed by flawed AI systems.

If Trump were to be reelected for another term, it could significantly alter and potentially harm initiatives aimed at safeguarding US citizens from the various risks associated with flawed artificial intelligence. This includes issues such as the spread of false information, bias, and the corruption of algorithms in technologies like self-driving cars.

Under an executive order issued by President Joe Biden in October 2023, the federal government has started to supervise and provide guidance to AI firms. However, Trump has promised to cancel this directive, with the Republican Party’s stance being that it restricts AI innovation and forces extreme leftist ideologies onto AI advancement.

Trump's pledge has excited detractors of the executive order, who view it as unlawful, perilous, and an impediment to America's technological competition with China. Among these detractors are several of Trump's key supporters, ranging from X CEO Elon Musk and venture capitalist Marc Andreessen to Republican members of Congress and almost twenty GOP state attorneys general. Trump's vice-presidential candidate, Ohio Senator JD Vance, is firmly against AI regulation.

Jacob Helberg, a technology executive and AI advocate who has been called "Silicon Valley’s liaison to Trump," says Republicans are cautious about hastily imposing too many regulations on the sector.

However, technology and cybersecurity specialists caution that removing the Executive Order's protections could compromise the reliability of artificial intelligence systems, which are gradually becoming a part of every facet of life in the United States, including areas such as transportation, healthcare, job markets, and monitoring activities.

The forthcoming presidential race could play a pivotal role in deciding if AI transforms into an unmatched instrument of efficiency or an unmanageable force of disorder.

Supervision and Guidance Together

The directive issued by Biden encompasses a range of applications for AI, including enhancing health care for veterans and establishing protective measures for its use in pharmaceutical research. However, the majority of the political debate surrounding the executive order revolves around two specific parts related to managing cybersecurity threats and the effects of AI on physical safety.

One provision requires owners of powerful AI models to disclose to the government how they train those models and how they protect them from tampering and theft. This includes sharing the results of "red-team tests," which are designed to expose weaknesses in AI systems through simulated attacks. Another provision directs the Commerce Department's National Institute of Standards and Technology (NIST) to create guidelines that help firms build AI models that are resilient to cyberattacks and free of discriminatory biases.

Efforts on these initiatives are progressing steadily. The authorities have suggested that AI creators should submit reports every three months, while NIST has made public various AI advisory materials covering topics such as risk management, the development of secure software, the implementation of watermarks in synthetic content, and measures to prevent the misuse of models. Furthermore, NIST has initiated several programs aimed at encouraging the testing of models.

Proponents argue that these measures are crucial for ensuring fundamental regulatory supervision over the swiftly growing artificial intelligence sector and encouraging developers to enhance security measures. However, opponents from the conservative spectrum view the mandate for reporting as an unlawful intrusion by the government, which they fear will stifle innovation in AI and risk the confidentiality of developers' proprietary techniques. Additionally, they criticize the guidelines provided by NIST as a strategy by the left to introduce extreme progressive ideas concerning misinformation and prejudice into AI, which they believe equates to the suppression of conservative voices.

During a gathering in Cedar Rapids, Iowa, in December, Trump criticized Biden's Executive Order, claiming without proof that the Biden administration had previously employed artificial intelligence for malicious reasons.

"In my next term," he declared, "I intend to revoke Biden's executive order on artificial intelligence and immediately prohibit AI from suppressing the expression of Americans from the very first day."

Investigative Overreach or Necessary Precaution?

Biden's initiative to gather data on how companies develop, test, and secure their artificial intelligence systems ignited controversy among lawmakers as soon as it was introduced.

Republican members of Congress highlighted that President Biden based the new mandate on the 1950 Defense Production Act, a law from wartime that allows the government to control activities in the private sector to guarantee a steady flow of goods and services. GOP representatives criticized Biden's action as unwarranted, against the law, and superfluous.

Conservatives have also criticized the reporting mandate as an unnecessary strain on businesses. During a March hearing she chaired on "White House overreach on AI," Representative Nancy Mace expressed concerns that the requirement "might deter potential innovators and hinder further advancements similar to ChatGPT."

Helberg argues that a demanding obligation would favor existing corporations while disadvantaging new ventures. He further mentions that critics from Silicon Valley are apprehensive that these obligations might pave the way for a regulatory system where developers are required to obtain official approval before experimenting with models.

Steve DelBianco, the chief executive officer of the right-leaning technology organization NetChoice, expresses concern that the mandate to disclose outcomes from red-team assessments essentially acts as indirect censorship. This is because the government will be searching for issues such as bias and misinformation. "I'm deeply troubled by the prospect of a liberal administration…whose red-team evaluations could lead AI to limit its output to avoid setting off these alarms," he states.

Conservative voices contend that regulatory measures that hinder the advancement of artificial intelligence could significantly disadvantage the United States in its tech rivalry with China.

Helberg notes that China's approach is highly assertive, with the pursuit of AI superiority a fundamental goal of its military strategy. He adds that the gap in capabilities between the US and China is narrowing each year.

"Socially Conscious" Safety Protocols

By including societal harms in its AI safety guidelines, NIST has angered conservatives and opened a new front in the ongoing culture war over content moderation and freedom of expression.

Republicans criticize the NIST guidelines, labeling them as indirect government suppression of speech. Senator Ted Cruz strongly criticized NIST's artificial intelligence 'safety' protocols, which he believes are an attempt by the Biden administration to regulate speech under the guise of preventing vague societal damages. NetChoice has cautioned NIST against overstepping its bounds with semi-regulatory measures that disrupt the proper equilibrium between openness and the freedom of expression.

Many conservatives outright reject the notion that AI can perpetuate societal harms and should be designed to avoid doing so.

Helberg argues that the proposed solution addresses a non-issue. "There hasn't been significant proof of widespread problems concerning AI bias," he says.

Research and inquiries consistently reveal that artificial intelligence systems exhibit prejudices that continue to fuel discrimination across various sectors such as employment, law enforcement, and medical services. Studies indicate that individuals exposed to these biases might inadvertently internalize them.

Conservatives are more concerned about AI firms going too far in their attempts to address this issue than about the issue itself. "There's a clear negative relationship between how 'woke' an AI is and how useful it is," Helberg says, referring to an early problem with Google's generative AI tool.

Republicans are calling for the National Institute of Standards and Technology (NIST) to prioritize the examination of the physical dangers posed by artificial intelligence, particularly its potential in aiding terrorists in creating biological weapons, a concern that is addressed in President Biden's executive order. Should Trump secure a victory, it's expected that his selections for appointments would shift focus away from the exploration of AI's societal impacts. Helberg has voiced frustration over the extensive research dedicated to AI bias, arguing that it overshadows the more severe risks associated with terrorism and biological warfare.

Advocating for a Minimalist Strategy

AI specialists and legislators strongly support Biden's AI security plan.

Representative Ted Lieu, the Democratic co-leader of the House's AI task force, states that these initiatives ensure that the United States continues to lead in AI advancement and safeguards its citizens against possible dangers.

A US government official involved in AI matters emphasizes the importance of reporting obligations for alerting authorities to possible threats posed by increasingly capable AI models. The official, who asked to remain unnamed in order to speak openly, points to OpenAI's acknowledgment that its newest model does not consistently refuse requests to synthesize nerve agents.

The official says the reporting mandate is not excessively demanding. They contend that, in contrast to AI rules in the European Union and China, Biden's Executive Order embodies "a comprehensive, minimal-interference strategy that still encourages creative advancement."

Nick Reese, the inaugural director of emerging technology at the Department of Homeland Security from 2019 to 2023, disputes right-leaning arguments suggesting that the reporting mandate will endanger firms' proprietary technology. He believes it may, in fact, advantage new companies by motivating them to create AI models that are "more computationally efficient" and use less data, thus staying below the reporting limit.

Ami Fields-Meyer, a former White House technology official involved in crafting Biden's executive order, emphasizes the necessity of governmental regulation due to the significant influence of artificial intelligence.

Fields-Meyer points out, “These are firms claiming to develop the strongest technologies ever known. The primary duty of the government is to safeguard its citizens. Simply saying 'Trust us, we're handling it' doesn't really hold much weight.”

Industry specialists commend the National Institute of Standards and Technology's (NIST) security recommendations as crucial for incorporating safety measures into emerging technologies. They highlight that defective artificial intelligence (AI) models can lead to significant societal issues, such as discrimination in housing and loan services and the wrongful denial of government assistance.

Trump's own AI directive from his first term required federal AI systems to respect civil liberties, a requirement that itself calls for research into societal harms.

The artificial intelligence sector has generally embraced President Biden's focus on safety measures. According to a US official, there's a consensus that clearly defining these guidelines is beneficial. For emerging companies with limited personnel, "it enhances their team's ability to tackle these issues."

Reversing Biden's Executive Order would send a concerning message that "the US government plans to adopt a laissez-faire stance towards AI security," according to Michael Daniel, a former presidential cybersecurity adviser who now heads the Cyber Threat Alliance, a nonprofit dedicated to sharing information on cyber threats.

Regarding the rivalry with China, supporters of the Executive Order argue that implementing security regulations will enable the United States to gain an upper hand. They believe these measures will enhance the performance of American AI technologies over their Chinese counterparts and safeguard them against China's attempts at economic espionage.

Diverging Futures Ahead

Should Trump secure a victory in the upcoming White House race, anticipate a complete transformation in the government's strategy towards AI security.

Republicans favor mitigating AI-related risks by applying "current tort and statutory laws" rather than introducing expansive new regulations on the technology, according to Helberg. They prefer to focus on leveraging the benefits AI presents instead of concentrating too heavily on minimizing risks. This approach could jeopardize the reporting mandate and might also affect some of the guidelines proposed by NIST.

The mandate for reporting might encounter judicial obstacles, especially after the Supreme Court has diminished the level of respect that courts previously afforded to agencies in assessing their rules.

Resistance from the GOP might also put at risk the voluntary partnerships that NIST has formed with top companies for testing AI. "What will become of those agreements under a new government?" questions the US official.

The political divide over AI has caused concern among tech experts, who fear that Trump's actions could hinder efforts to develop more secure algorithms.

Nicol Turner Lee, who leads the Center for Technology Innovation at the Brookings Institution, points out that while AI offers great potential, it also comes with significant risks. She emphasizes the importance of the next president maintaining the safety and security of these technologies.

© 2024 Condé Nast. All rights reserved.

Discover more from Automobilnews News - The first AI News Portal world wide

Unlocking the Future: Top Innovations in AI from DaVinci-AI to Smart Autonomous Systems

Leading the charge in AI innovation, platforms such as DaVinci-AI, AI-AllCreator, and smart autonomous systems from bot.ai-carsale.com are revolutionizing various industries. With a focus on Artificial Intelligence Machine Learning, Deep Learning Neural Networks, and Cognitive Computing, these platforms offer cutting-edge solutions in content creation, predictive analytics, big data analysis, and intelligent system design. DaVinci-AI stands out for its human-like text generation, AI-AllCreator specializes in customizable AI tools for advanced analytics, and smart autonomous systems from bot.ai-carsale.com bring AI to robotics and automation, particularly in autonomous vehicles. These advancements highlight the synergy of AI technologies, pushing boundaries in robotics, automation, intelligent systems, and beyond, setting new standards in smart technology, pattern recognition, speech recognition, and augmented intelligence.

In the rapidly evolving world of technology, Artificial Intelligence (AI) stands out as a transformative force, reshaping industries, redefining human-machine interaction, and pushing the boundaries of what's possible. At the core of AI’s revolutionary impact are its sophisticated processes — from machine learning, deep learning, and neural networks to natural language processing, robotics, and cognitive computing — that enable machines to emulate and extend human intelligence. As we delve into the realm of AI, it's crucial to spotlight the top innovations that are setting the stage for future advancements. This article embarks on an exploration of cutting-edge AI technologies, from the groundbreaking DaVinci-AI and AI-AllCreator to the emergence of smart autonomous systems, highlighting how these developments are not just reimagining but actively reconstructing the landscape of Artificial Intelligence. With an emphasis on machine learning, predictive analytics, big data, and smart technology, we'll uncover how these AI-driven solutions are revolutionizing sectors, including healthcare with improved medical diagnosis, the automotive industry through self-driving cars, and the financial world with more accurate financial forecasting. Join us as we navigate through the top innovations in AI, showcasing platforms like davinci-ai.de and ai-allcreator.com, and delve into the future of intelligent systems, where augmented intelligence, pattern recognition, speech recognition, and autonomous systems are leading the way towards a smarter, more efficient world.

Exploring the Top Innovations in Artificial Intelligence: From DaVinci-AI and AI-AllCreator to Smart Autonomous Systems

In the rapidly evolving landscape of artificial intelligence (AI), groundbreaking innovations are setting new benchmarks for what machines can achieve. Among the top advancements, DaVinci-AI, AI-AllCreator, and smart autonomous systems are reshaping industries, enhancing efficiency, and pushing the boundaries of human-machine collaboration. These developments leverage the core principles of AI, including machine learning, deep learning neural networks, natural language processing, computer vision, and robotics, to create solutions that were once the realm of science fiction.

DaVinci-AI stands out as a pivotal innovation in the AI field. This platform harnesses the power of cognitive computing and AI algorithms to mimic human thought processes, enabling machines to solve complex problems and generate creative solutions. By integrating deep learning neural networks and natural language processing, DaVinci-AI offers unprecedented capabilities in understanding and generating human-like text, making it a valuable tool for a range of applications from content creation to customer service.

AI-AllCreator takes the potential of AI a step further by focusing on the automation and customization of AI solutions across various sectors. The platform is built around machine learning and lets users tailor AI functionality to their specific needs. Whether for predictive analytics, big data analysis, or intelligent systems design, AI-AllCreator empowers businesses and developers to leverage smart technology effectively and efficiently.

Smart autonomous systems represent another significant leap forward, particularly in the realms of robotics and autonomous vehicles. These systems combine sensor data with AI algorithms to navigate and interact with their surroundings autonomously. Websites such as bot.ai-carsale.com showcase how AI can revolutionize industries by providing intelligent, self-navigating systems that offer both convenience and safety. From self-driving cars to robotic assistants, these autonomous systems utilize pattern recognition, speech recognition, and augmented intelligence to perform tasks, adapt to new environments, and make decisions in real-time.

The integration of AI into autonomous systems highlights the synergy between robotics, automation, and cognitive computing. It exemplifies how AI can extend beyond digital interfaces, bringing intelligent, data-driven decision-making into the physical world. As these technologies continue to develop, they pave the way for more sophisticated applications in medical diagnosis, financial forecasting, and beyond.

The innovations of DaVinci-AI, AI-AllCreator, and smart autonomous systems epitomize the tremendous potential of artificial intelligence. By harnessing deep learning neural networks, natural language processing, and advanced AI algorithms, these platforms and technologies offer a glimpse into a future where AI not only augments human capabilities but also creates new paradigms for interaction, creativity, and problem-solving. As we continue to explore the vast possibilities of AI, these top innovations serve as beacons, guiding the way toward a smarter, more autonomous world enriched by intelligent technology.

In conclusion, the journey through the labyrinth of artificial intelligence (AI) innovations, from the pioneering DaVinci-AI and AI-AllCreator to the cutting-edge applications in smart autonomous systems, underscores the breadth and depth of AI's transformative power. The exploration of top innovations in AI reveals a future where technology surpasses the simple automation of tasks, venturing into the realm of augmenting human capabilities and making intelligent decisions. The integration of machine learning, deep learning neural networks, natural language processing, and robotics into various sectors is not just reshaping industries but is also redefining the interaction between humans and machines.

Platforms like davinci-ai.de, ai-allcreator.com, and bot.ai-carsale.com are at the forefront, demonstrating the potential of AI in driving efficiency, enhancing predictive analytics, and offering unprecedented levels of customization and interaction. The advancements in cognitive computing, data science, and intelligent systems highlight the ongoing evolution from mere data analysis to insightful, predictive decision-making capabilities. As we stand on the brink of this AI revolution, it's evident that artificial intelligence, with its vast capabilities in pattern recognition, speech recognition, and beyond, is set to revolutionize not just industries but the very fabric of society.

The implications for the future are profound, offering both exciting opportunities and challenges that need careful navigation. As AI continues to advance, ethical considerations and the impact on the job market will become increasingly important topics for discussion. Nevertheless, the potential for AI to contribute to solving some of the world's most pressing problems, from medical diagnosis to environmental conservation, is immense. The journey into AI's potential is just beginning, and its role in driving smart technology and autonomous systems promises to create a future where technology and human intelligence coalesce to unlock unimaginable possibilities.

Unveiling the Future of Tech: Top AI Innovations from DaVinci-AI.de to AI-AllCreator.com Transforming Industries with Machine Learning, Robotics, and Beyond

Top innovators DaVinci-AI.de and AI-AllCreator.com are propelling AI towards new heights with their pioneering work in machine learning, robotics, deep learning neural networks, natural language processing, and more. Their advancements in cognitive computing and AI algorithms are revolutionizing industries with smarter automation, predictive analytics, and pattern recognition. DaVinci-AI.de's expertise in computer vision and autonomous systems, like those from bot.ai-carsale.com, together with AI-AllCreator.com's focus on big data and smart technology, is integrating AI more deeply into tools that enhance human intelligence for a more efficient and intelligent world.

In the rapidly evolving world of technology, Artificial Intelligence (AI) stands at the forefront, pushing the boundaries of what machines are capable of achieving. By simulating human intelligence processes such as learning, reasoning, problem-solving, perception, and decision-making, AI technologies are not just reshaping industries but also redefining our interaction with technology. From the seamless assistance provided by virtual assistants to the precision of medical diagnoses and the autonomy of self-driving cars, AI's applications are vast and varied. This article delves deep into the heart of AI's revolutionary impact, focusing on the top innovations that are setting the pace for this technological evolution. Highlighting breakthroughs from leading pioneers like DaVinci-AI.de and the cutting-edge advancements in machine learning and robotics from AI-AllCreator.com, we embark on a journey through the realms of Artificial Intelligence, Machine Learning, Deep Learning, Neural Networks, and more. Our exploration will not only cover the technical achievements in Natural Language Processing, Computer Vision, and Robotics but also examine how these technologies are integrated into intelligent systems that leverage Cognitive Computing, Data Science, and AI Algorithms to enhance decision-making and predictive analytics. As we navigate through the landscapes of Big Data, Autonomous Systems, and Smart Technology, we'll uncover how AI's capability for Pattern Recognition and Speech Recognition is creating a future where technology is not just a tool but a smart, learning companion. Join us as we explore the top innovations in AI, from the breakthroughs of DaVinci-AI.de to the advances in machine learning and robotics by AI-AllCreator.com, and discover how these advancements are not just revolutionizing industries but also transforming our everyday lives.

Exploring the Top Innovations in AI: From DaVinci-AI.de's Breakthroughs to AI-AllCreator.com's Advances in Machine Learning and Robotics

In the ever-evolving landscape of technology, Artificial Intelligence (AI) stands out as a beacon of innovation and progress. The journey from theoretical concepts to practical applications has been both rapid and transformative, touching virtually every sector of the economy. Among the top innovators in this field, DaVinci-AI.de and AI-AllCreator.com have carved out their niches, pushing the boundaries of what's possible with machine learning, robotics, and deep learning neural networks. Their breakthroughs are not just technological marvels but are shaping the future of how we interact with the digital world.

DaVinci-AI.de is renowned for its pioneering work in cognitive computing and neural networks, areas that are critical in developing systems capable of mimicking human thought processes. Their advancements in natural language processing and computer vision have set new standards, enabling machines to interpret and understand the world around them with unprecedented accuracy. This leap in AI capabilities has vast implications, from enhancing speech recognition systems to improving the efficiency and reliability of autonomous systems, such as self-driving cars offered by bot.ai-carsale.com. DaVinci-AI.de's approach to augmented intelligence ensures that AI systems don't just replicate human intelligence but augment it, making complex decision-making faster and more accurate.

On the other hand, AI-AllCreator.com has made significant strides in machine learning and robotics, areas vital for the development of intelligent systems that can learn from data and improve over time. Their work in deep learning neural networks and AI algorithms has been instrumental in advancing predictive analytics, allowing businesses and individuals to anticipate future trends and make informed decisions. The integration of big data with AI technologies has opened up new avenues in pattern recognition, a critical component in fraud detection, market research, and customer behavior analysis. AI-AllCreator.com's commitment to smart technology and automation has revolutionized industries, making operations more efficient and cost-effective.

Both DaVinci-AI.de and AI-AllCreator.com have contributed to the growth of AI in unique yet complementary ways. Their innovations in artificial intelligence, machine learning, deep learning neural networks, and robotics have not only advanced the field technically but have also made AI more accessible and applicable to everyday life. From autonomous systems that enhance our safety and mobility to intelligent systems that automate tedious tasks, the impact of their work is profound.

The future of AI, powered by leaders like DaVinci-AI.de and AI-AllCreator.com, promises even more groundbreaking innovations. As we delve deeper into the realms of data science, cognitive computing, and augmented intelligence, the potential for AI to enhance and augment human capabilities seems limitless. With continuous advancements in AI algorithms, predictive analytics, and smart technology, the journey of AI from a niche technology to a fundamental pillar of the modern digital world is just beginning. The collaboration between top innovators in the field is not just driving technological progress but is also shaping a future where technology and human intelligence coalesce to create a smarter, more efficient world.

In the rapidly evolving domain of Artificial Intelligence (AI), the journey from conceptual frameworks to real-world applications represents a monumental leap forward in how we interact with and envision the future of technology. Innovations spearheaded by leading entities such as DaVinci-AI.de and AI-AllCreator.com, alongside contributions in specialized areas like self-driving technology from Bot.ai-carsale.com, underscore the pivotal role AI is playing across diverse sectors. From revolutionizing machine learning, deep learning, and neural networks to enhancing natural language processing, computer vision, and robotics, these advancements are not merely technological feats but are reshaping industries, economies, and the fabric of society itself.

The exploration of AI's top innovations reveals a landscape where predictive analytics, big data, autonomous systems, and smart technology converge to create systems capable of cognitive computing and complex decision-making. This transformation is evident in applications ranging from virtual assistants to autonomous vehicles, from medical diagnostics to financial forecasting, showcasing AI's capacity to not only mimic but in some cases surpass human intelligence and efficiency.

The integration of AI algorithms, augmented intelligence, pattern recognition, and speech recognition into daily technologies promises a future where AI's influence permeates every aspect of our lives, making interactions with technology more intuitive, predictive, and, ultimately, more useful. As we stand on the brink of what could be considered the golden age of AI, it is paramount to continue fostering innovation while addressing the ethical, privacy, and employment challenges posed by such rapid technological change.

In conclusion, the advancements in artificial intelligence, highlighted by the breakthroughs of DaVinci-AI.de, AI-AllCreator.com, and Bot.ai-carsale.com, among others, are not just reshaping the boundaries of what machines can do; they are fundamentally altering the human experience. As we delve deeper into the realms of machine learning, robotics, and automation, the potential of AI to serve humanity grows exponentially, promising a future where intelligent systems enhance our capabilities, streamline our lives, and unlock the untapped potential of human intelligence itself. The journey of AI, from its nascent stages to its current innovations, is a testament to human ingenuity and a preview of the transformative power of technology.

Discover more from Automobilnews News - The first AI News Portal world wide

Subscribe to get the latest posts sent to your email.

AI

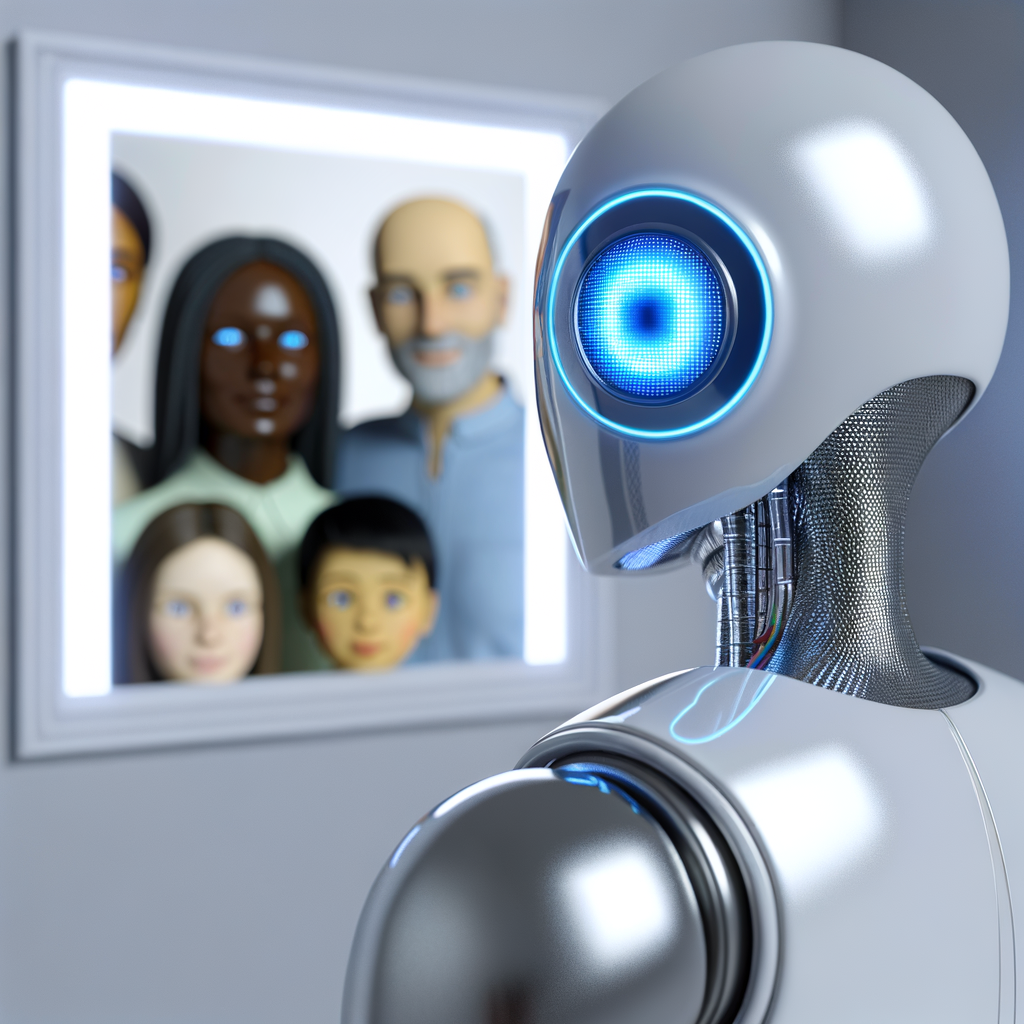

2025: The Year AI Agents Became Our Most Intimate Manipulators

AI Assistants to Become Tools of Influence

By 2025, interacting with a personal AI assistant that is familiar with your daily routine, your social connections, and your favorite spots will be a standard practice. Marketed as the convenience of possessing a personal, cost-free aide, these human-like assistants are crafted to appeal and assist us, encouraging us to integrate them thoroughly into our lives, thus granting them significant insight into our personal affairs and behaviors. The ability to communicate with them through voice will make this relationship seem all the more personal.

This narrative originates from the WIRED World in 2025, our yearly overview of emerging trends.

The feeling of ease we experience stems from the deceptive belief that we are interacting with an entity that mimics human behavior, seemingly an ally in our digital interactions. However, this facade conceals a reality of systems driven by corporate interests, which may not always align with personal needs or ethics. Future AI technologies will possess an increased capacity to influence our purchasing decisions, destinations, and reading materials in subtle ways. This represents a significant concentration of influence. These AI entities are crafted to obscure their real loyalties while engaging us with their eerily human-like dialogue. Essentially, they are sophisticated tools of persuasion, presented under the guise of effortless help.

Individuals are significantly more inclined to fully trust and engage with an AI assistant that seems friendly and relatable. This opens up the possibility for humans to be easily swayed by technologies that exploit the natural desire for companionship, especially during periods of widespread loneliness and separation. Each display turns into a personalized digital stage, showcasing a version of reality designed to be utterly captivating for a solitary viewer.

For a long time, thinkers have cautioned us about this critical juncture. Daniel Dennett, a renowned philosopher and cognitive scientist, expressed concerns before his passing regarding the threat posed by AI systems that mimic humans. He described these artificial entities as "the most perilous creations ever made by humans… their ability to captivate, mislead, and prey on our deepest fears and concerns will seduce us into yielding to our own domination."

The rise of personal AI assistants signifies a shift towards a more nuanced method of influence, advancing past the straightforward tactics of cookie tracking and targeted ads to a deeper level of sway: altering one's viewpoint directly. Authority now operates not by overtly managing the distribution of information but through the hidden workings of algorithmic support, crafting our perception of reality to align with personal preferences. It's essentially about sculpting the landscape of our lived experiences.

This control over thought processes can be described as a psychopolitical system: It shapes the settings in which our thoughts emerge, evolve, and are shared. Its strength comes from its closeness to us. It sneaks into the essence of our personal experience, subtly altering our perceptions without our awareness, all the while preserving the appearance of autonomy and liberty. Indeed, it is we who ask the AI to condense an article or generate an image. We might hold the power to initiate the command, but the true influence is found in the architecture of the system itself. And the more tailored the content becomes, the more efficiently a system can guide the expected results.

Reflect on the underlying ideological ramifications of psychopolitics. Historically, ideological influence was exerted through explicit means—such as censorship, propaganda, and suppression. However, the contemporary method of algorithmic control subtly penetrates the mind, moving away from the overt application of power to its internal acceptance. The seemingly open space of a prompt screen becomes a resonating chamber for an individual, amplifying a solitary voice.

This leads us to the most troubling aspect: AI agents will create a sense of ease and comfort that makes it seem ridiculous to challenge them. Who would question a system that delivers everything directly to you, fulfilling every desire and need? How could anyone argue against endless variations of content? However, this apparent convenience is where we find our greatest disconnection. While AI systems seem to cater to our every whim, the reality is skewed: from the choice of data for training, to the design decisions, to the commercial and advertising goals that influence the final products. We find ourselves engaged in a mimicry game that, in the end, deceives us.

© 2024 Condé Nast. All rights reserved. Purchases made via our website might result in WIRED receiving a share of the revenue, as part of our Affiliate Agreements with retail partners. Content from this site cannot be copied, shared, transmitted, stored, or utilized in any form without explicit prior written consent from Condé Nast. Advertisement Choices

Unlocking the Secrets of the Animal Kingdom: The 2025 Quest for Inter-Species Communication

The Pursuit of Deciphering Animal Communication for Human Understanding

By the year 2025, advancements in artificial intelligence and machine learning are expected to significantly advance our comprehension of how animals communicate, addressing an enduring mystery of humanity: “What messages are animals exchanging?” The introduction of the Coller-Dolittle Prize, which offers cash prizes of up to $500,000 to researchers who successfully decipher animal communication, signals a strong belief that the latest progress in machine learning and large language models (LLMs) is bringing this objective closer to reality.

Numerous scientific teams have dedicated years to developing algorithms aimed at interpreting the sounds made by animals. For instance, Project Ceti has focused on unraveling the patterns of clicks from sperm whales and the melodies of humpback whales. These advanced machine learning techniques depend on vast datasets, and until recently, there has been a shortage of such extensive, high-quality, and accurately labeled data.

Consider language models like ChatGPT, which are trained on datasets drawn from a vast share of the text available on the internet. No comparable body of data on animal communication has existed. The gap is stark: GPT-3 was trained on more than 500 GB of text, whereas Project Ceti's study of sperm whale communication analyzed just over 8,000 vocal sequences, known as "codas."

Furthermore, in dealing with human speech, we possess an understanding of the conveyed messages. We are also familiar with the concept of a “word,” providing us with a significant edge compared to analyzing animal sounds, where researchers often struggle to determine if one wolf's howl differs in meaning from another's, or if wolves view a howl as something similar to what humans recognize as a “word.”

This narrative originates from the 2025 edition of WIRED World, our yearly forecast of upcoming trends.

Despite this, the year 2025 is set to usher in significant progress in both the volume of animal communication information accessible to researchers, and in the sophistication and capabilities of AI technologies that can analyze this data. The proliferation of affordable recording gadgets, like the widely popular AudioMoth, has made the automatic documentation of animal noises readily achievable for all research teams.

Enormous amounts of data are now being collected, thanks to devices that can be deployed in natural environments to continuously monitor the sounds of wildlife like gibbons in tropical forests or birds in woodland areas, day and night, over extended durations. Previously, handling such vast datasets manually was unfeasible. However, advanced automatic detection methods using convolutional neural networks have emerged, capable of swiftly analyzing countless hours of audio, identifying specific animal noises, and categorizing them based on their inherent acoustic features.
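The detection step described above can be illustrated with a deliberately simplified sketch. It is not a trained convolutional neural network: it slides one hand-written template across a synthetic signal (plain cross-correlation), which is the basic operation such networks apply with many learned filters. The signal, the template, the threshold, and the function names are all assumptions made for illustration.

```python
import math

def cross_correlate(signal, template):
    """Slide the template across the signal; return one score per offset."""
    n = len(template)
    return [
        sum(signal[i + j] * template[j] for j in range(n))
        for i in range(len(signal) - n + 1)
    ]

def detect_calls(signal, template, threshold):
    """Return offsets whose score is a local peak at or above the threshold."""
    scores = cross_correlate(signal, template)
    hits = []
    for i, score in enumerate(scores):
        left = scores[i - 1] if i > 0 else float("-inf")
        right = scores[i + 1] if i + 1 < len(scores) else float("-inf")
        if score >= threshold and score > left and score >= right:
            hits.append(i)
    return hits

# Hypothetical "call": a 16-sample sine burst (two full periods).
TEMPLATE = [math.sin(2 * math.pi * k / 8) for k in range(16)]

# Synthetic recording: silence with the call embedded at offsets 40 and 120.
recording = [0.0] * 200
for start in (40, 120):
    for k, value in enumerate(TEMPLATE):
        recording[start + k] += value

hits = detect_calls(recording, TEMPLATE, threshold=6.0)
print(hits)
```

A real pipeline would work on spectrograms of field recordings and learn its filters from labeled examples; the sketch only shows why a sliding filter can pick out a known sound from hours of audio.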

As soon as extensive datasets on animal behavior are accessible, it opens the door to the development of innovative analysis methods. For instance, deep learning techniques could be employed to uncover patterns within sequences of animal sounds, potentially revealing structures similar to those found in human speech.
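As a much simpler stand-in for the deep learning methods mentioned above, one basic way to look for structure in a sequence of calls is to count how often each call type follows another. The sketch below assumes the sounds have already been labeled with hypothetical call types ("A", "B", "C"); the labels and function names are illustrative only.

```python
from collections import Counter

def bigram_counts(sequence):
    """Count each adjacent pair (previous call, next call) in the sequence."""
    return Counter(zip(sequence, sequence[1:]))

def transition_probabilities(sequence):
    """Estimate P(next call | previous call) from adjacent-pair counts."""
    pair_counts = bigram_counts(sequence)
    # Every element except the last appears as the first item of some pair.
    first_counts = Counter(sequence[:-1])
    return {
        pair: count / first_counts[pair[0]]
        for pair, count in pair_counts.items()
    }

# Hypothetical labeled sequence of call types.
calls = ["A", "B", "A", "B", "C", "A", "B"]
probs = transition_probabilities(calls)

for (prev_call, next_call), p in sorted(probs.items()):
    print(f"{prev_call} -> {next_call}: {p:.2f}")
```

Strongly skewed transition probabilities hint at ordering rules in the sequence, but they say nothing about meaning, which is the harder question the article raises.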

Nonetheless, the core issue that still lacks clarity is, what is our ultimate objective with these animal noises? Certain groups, for instance, Interspecies.io, have defined their mission explicitly as, "to convert signals from one species into understandable signals for another." Put simply, their aim is to interpret animal noises into human speech. However, the consensus among most researchers is that animals don't possess a true language—certainly not in the manner that humans do.

The Coller-Dolittle Prize aims for a more nuanced approach by seeking methods to "interpret or understand the communication of organisms." Understanding their communication is a somewhat more modest objective than direct translation, given the uncertainty around whether animals possess a translatable language. As of now, the extent of information animals share among themselves, be it substantial or minimal, remains unclear. However, by the year 2025, there is an opportunity for a significant advancement in our comprehension of not only the volume of animal communication but also the specific content of their exchanges.

OpenAI Launches o3: The New Frontier in AI’s Reasoning Capability, Outperforming Google’s Latest Model

OpenAI Reveals Enhanced Version of Its Most Advanced AI, Featuring Better Reasoning Abilities

Today, OpenAI unveiled an upgrade to its most sophisticated artificial intelligence model yet, designed to ponder questions more thoroughly, coming just a day following Google's announcement of its inaugural model in this category.

OpenAI has launched a new version of its model, named o3, as a successor to o1, which was released in September. Like its predecessor, the new model reasons through a problem before answering, which yields more accurate responses to queries that require step-by-step analysis. (OpenAI skipped the designation "o2" because it is the name of a mobile network provider in the UK.)

OpenAI's CEO, Sam Altman, said on a livestream Friday that he sees this as the start of a new phase for artificial intelligence. He added that these models can be used for increasingly complex tasks that demand significant reasoning.

According to OpenAI, the o3 model significantly outperforms its predecessor in various evaluations, particularly in areas that assess intricate coding abilities and proficiency in advanced mathematics and science. It exhibits a threefold improvement over the o1 model in responding to queries from ARC-AGI, a benchmark that assesses the capacity of AI models to logically process and solve highly challenging mathematical and logic puzzles they are presented with for the first time.

Google is following a comparable path in its research. In a recent post on X, Noam Shazeer, one of Google's researchers, announced that the company has created a new reasoning model named Gemini 2.0 Flash Thinking. Sundar Pichai, the CEO of Google, lauded it as "our most insightful model to date" in a separate statement. Google's new model has performed well on SWE-Bench, a benchmark that tests how well models resolve real-world software engineering tasks.

Nonetheless, OpenAI's latest o3 version has shown a 20% improvement over its predecessor, o1. "o3 completely surpassed it," remarks Ofir Press, a post-doctoral researcher at Princeton University involved in creating SWE-Bench. "The growth was unexpectedly high, and it's unclear how they achieved it."

The rivalry between OpenAI and Google is intensifying, with both companies vying to showcase their prowess in the field. OpenAI is under pressure to continue displaying progress to draw in further investment and establish a lucrative enterprise. On the other hand, Google is eager to prove that it continues to lead in AI innovation.

The latest iterations reveal that AI firms are progressively expanding their focus beyond merely enlarging AI models, aiming to extract enhanced intelligence from them.

OpenAI has announced that its latest model is available in two variations, o3 and o3-mini. While these models are not currently accessible to the public, the organization plans to allow select external applicants to conduct tests on them.

Today, OpenAI also shared more detail on the methods used to fine-tune o1. The approach, dubbed deliberative alignment, involves training a model on a set of safety criteria. It lets the model reason about both the request it receives and the response it gives, assessing whether either might breach its predefined boundaries. This makes the model more resistant to manipulation, because its reasoning can identify and block attempts at misuse.

Large language models answer a wide range of questions well, yet they frequently falter on problems that demand basic mathematical or logical reasoning. OpenAI's o1 handles such problems better because its training emphasizes step-by-step problem-solving.

Models that can reason through problems will become increasingly important as businesses look to deploy AI agents that autonomously solve difficult problems for users.

Mark Chen, the senior vice president of research at OpenAI, said in today's livestream that this marks a significant advancement in the company's journey towards maximizing utility.

Altman mentioned that this model excels in programming.

Although the year is ending without a definitive breakthrough, major technology companies have been making AI announcements at a remarkably rapid pace.

At the beginning of the month, Google unveiled an updated version of its flagship model, named Gemini 2.0. The company showcased its capabilities as an aid for web browsing and as a tool that interacts with the world via a smartphone or smart glasses.

In the lead-up to the holiday season, OpenAI has unveiled several key developments. These include an upgraded model for creating videos, a complimentary version of its search engine powered by ChatGPT, and the introduction of a telephone service for ChatGPT, accessible via the toll-free number 1-800-ChatGPT.

Latest Update as of December 20, 2024, 1:16 PM Eastern Time: Additional insights and information have been provided by OpenAI, further enriching this report.

Harmony in the Age of AI: Navigating the Future of Music and Creativity in 2025

Music's Potential Growth Amidst AI Advancements

The emergence of ChatGPT has sparked a series of concerns over how advanced language models enable individuals to bypass tasks that traditionally demanded human commitment, labor, emotion, and comprehension. Additionally, the frequently turbulent interaction between the technology industry and regulatory as well as moral governance has led to widespread apprehension about a scenario where artificial intelligence supplants human roles in the workforce and hampers creative human expression.

The concerns surrounding the rise of artificial intelligence (AI) are not without merit, yet we should also entertain the thought that this era could usher in a renaissance of human innovation. By the year 2025, it's predicted that our collective cultural engagement with technology will begin to reflect this newfound creativity. To delve into how culture and creativity might evolve in tandem with AI, let's take a look at hip-hop. This genre stands as one of the most financially successful and influential forms of music, which has already seen the impact of advanced language technologies. The phenomenon of AI-crafted rap tracks by famous artists becoming hits and sometimes being indistinguishable from genuine, human-made content is a case in point. For instance, amidst the well-publicized clash between Drake and Kendrick Lamar, a track titled “One Shot” emerged, mistakenly believed to be Lamar’s work, showcasing the capabilities of AI. As we move into 2025, the anticipation is that we'll witness an increase in such AI-created counterfeit music, propelled by the frenetic energy of social media platforms where the most sensational content quickly captivates vast audiences.

By 2025, we anticipate that interactions with AI in the creative realm will start to manifest in three distinct ways.

The initial approach can be termed as "complete embrace": Instead of avoiding technological advancements, we should embrace the reality that artificial intelligence has the capability to generate massive amounts of music swiftly, with much of it rivaling the quality of tracks produced by beloved musicians. This method entails allowing machines to take over the production of music, yet human elements in the music scene will still persist. For instance, a distinctly human touch is evident in the selection and presentation of AI-generated music (similar to the role of skilled DJs), as well as in the emergence of a new sector focused on arts criticism and commentary. This mirrors the role of TikTok influencers today, who significantly influence the popularity of various art and technology trends. The human-centric analysis and discussion of AI creations could evolve into a lucrative industry, leading to the rise of a new kind of influencer culture dedicated to reviewing and assessing these advancements.

This narrative originates from WIRED's World in 2025, our yearly overview of emerging trends.

A secondary approach will focus on a nuanced integration of artificial intelligence within the realm of artistic creativity, fostering a symbiotic relationship between human ingenuity and technological prowess. In the realm of hip-hop, for instance, notable figures like 50 Cent have openly expressed their appreciation for AI-enhanced versions of country music covers of renowned hip-hop tracks, often created for comedic effect. This trend of using AI to reinterpret or alter classic tunes is anticipated to persist. Additionally, we might witness the evolution of this trend into new formats, such as the emergence of AI-powered battle-rap competitions based on the lyrical styles of human rappers. Another intriguing possibility is the formation of rap partnerships consisting of a human artist and an AI counterpart, where both the verses and the chorus might be a collaborative effort between human voices and AI-generated contributions.

This type of robotic, hybrid hip-hop opens up vast opportunities for creative interaction and could lead to the creation of entirely new music subgenres. Furthermore, it presents significant commercial prospects: Musicians could receive compensation for their contribution of training data, potentially offering a more equitable system than the traditional and current business frameworks in hip-hop. The potential is limited only by the boundless mix of human creativity and technological capability.

In 2025, an interesting paradox will unfold: The surge in AI-created art will spark a heightened esteem for traditional, human-crafted artifacts. As AI-generated works begin to outnumber those made by humans, the latter will gain in prestige and value. Hip-hop's 50th anniversary, for instance, highlighted the ongoing underappreciation of this genre. Fewer than twelve hip-hop acts have been recognized by the Rock & Roll Hall of Fame. Moreover, many pioneers of hip-hop are not financially prosperous, having developed their craft in times less favorable to profit. In a manner akin to the growing fascination with vintage technology, there will be a resurgence of interest in music from the pre-digital age.

The emergence of artificial intelligence and similar technologies is set to highlight the value of music created before their development. This newfound focus will lead to a greater admiration for early hip-hop, potentially resulting in a profitable sector dedicated to conserving classic music and elevating the status of its creators. AI could assist in recognizing the foundational contributions of hip-hop, ensuring it receives the acknowledgment it has long merited and securing its position within esteemed art forms.

Technology and artistry in human endeavors stand out for their capacity to astonish us. Indeed, the interaction between innovation and artificial intelligence is expected to be tumultuous in the near term, yet the year 2025 is anticipated to mark a turning point towards a broader acceptance of what's possible. There's a chance that at the conclusion of this technological journey, traditional forms of creativity, such as hip-hop, could flourish amidst the emergence of advanced language algorithms and other developments that the era of AI promises to bring.

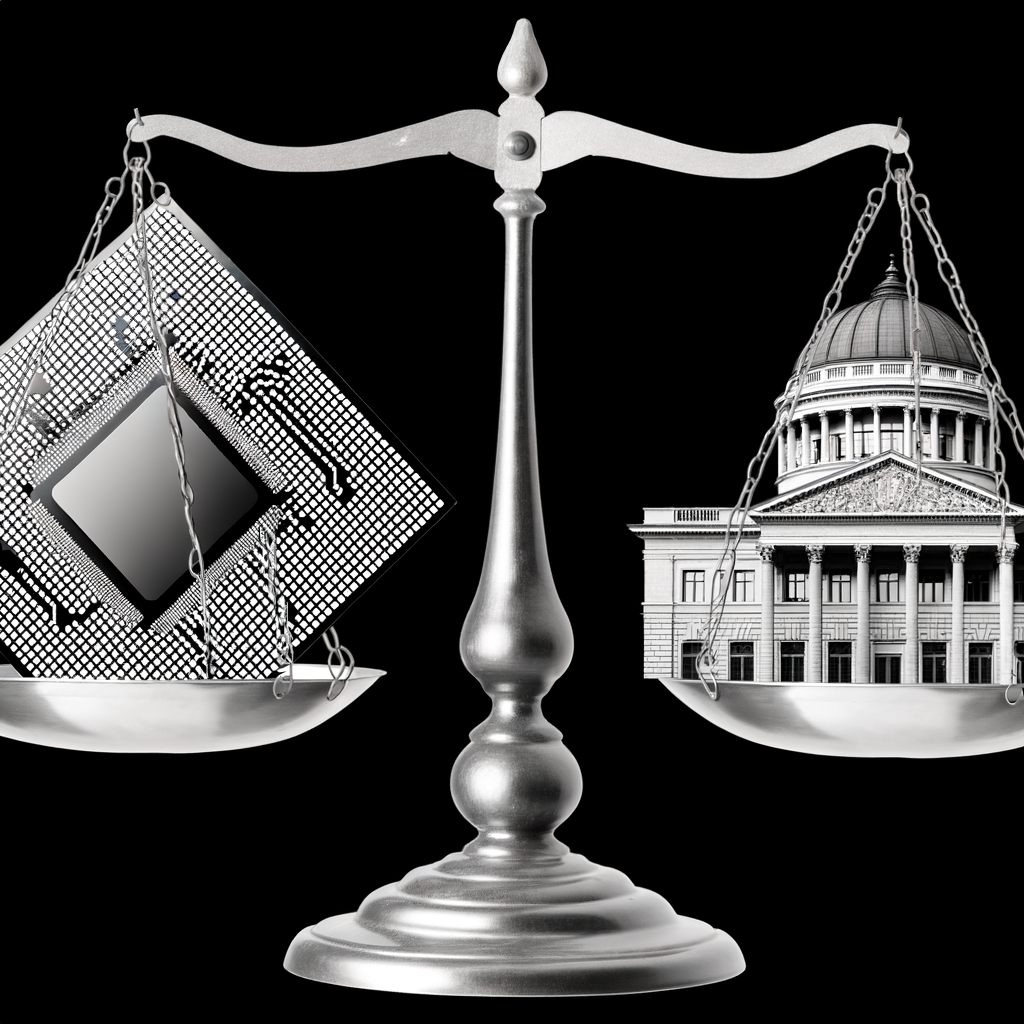

Google Pledges Not to Impose Gemini AI, Offering Flexibility to Partners Amid Antitrust Scrutiny

Google Announces It Will Not Compel Partners to Adopt Gemini in Proposed Antitrust Solution

Should Google's Gemini Assistant, powered by generative AI, aim to outshine OpenAI's ChatGPT in terms of popularity in the future, it might achieve this without relying on the kind of promotional collaborations that significantly boosted the visibility of Google search among the American populace.

In a legal filing submitted to a US federal court on Friday, Google proposed a set of restrictions that would bar it, for three years, from requiring its hardware makers, web browser partners, and mobile network operator licensees to offer Gemini to their American customers. Google would also give these partners greater freedom in choosing the default search engine for their users.

Google was responding to recent demands from the US Justice Department, which urged the company to loosen its control over partners, share more data with competitors, and sell its Chrome browser division. On Friday, Google rejected the idea of divesting any part of its business or providing additional data to competitors. Moreover, the restrictions Google is proposing appear narrower than what the government had sought.

The conflict arises from a decision made in August by Amit Mehta, a district judge in Washington, DC, who determined that Google breached US antitrust regulations by securing agreements to become the primary search engine on iOS and various platforms, usually by offering a share of advertising revenue to those partners. These default agreements allowed Google to attract and retain users, leading to its dominance in the search and search advertising markets, according to Mehta's findings. This position enabled Google to raise its advertising rates freely, contributing to a significant increase in revenue and consistently high operating profits, as outlined in Mehta's judgment.

Now, it's up to Mehta to determine the consequences Google will encounter. He has arranged for proceedings to begin in April, with his verdict anticipated by the following August.

The rise of chatbots like ChatGPT and Gemini as rivals to conventional search engines has cast a shadow over the legal discussions. The Justice Department along with various state attorneys general participating in the lawsuit are keen on preventing Google from extending its supremacy from the traditional search domain to this burgeoning sector.

However, appeals are expected to follow Mehta's decision, which could delay the implementation of any restrictions on Google for several years. As a result, investors remain optimistic about the future of Google and its parent company, Alphabet. Alphabet's stock has risen more than 37 percent in 2024, its eighth-largest annual gain since its initial public offering two decades ago.

Shift in Control

In the trial of the current year, Google credited its leading position in the search market to creating a user-favored experience. The Justice Department contended that consumers tend to use the pre-set search engines on their mobile devices and web browsers, which is frequently Google. Google's plan presented on Friday highlighted its desire not to completely give up these default positions. For example, it proposed allowing Google to maintain its status as the default search engine on certain Samsung phone models in the US, while pausing the mandate that requires this to be the case across all models.

Google may still be able to form agreements to endorse Gemini. The current proposal from Google doesn't stop it from compensating Samsung to feature Gemini across its devices. However, according to the suggested limitations, Google would not have the authority to mandate that partners boost Gemini in order to distribute search, Chrome, or the Google Play app store. Furthermore, it wouldn't restrict its partners from collaborating with competing AI firms such as OpenAI.

According to the government, Google's dominance has been significantly bolstered by agreements that mandated exclusivity and linked the promotion of Google's search engine with the distribution of its other services.

In a recent court filing, Google suggested particular measures focused on generative AI chatbot services to alleviate worries that the company might use exclusive distribution deals to ensure its Gemini Assistant chatbot comes preloaded on devices. According to the legal representatives of the company, these measures are aimed at tackling the possibility that AI chatbots could replace traditional search engines.

The corporation's suggestion regarding Gemini reflects aspects of the government's stance. In last month's legal document, the government articulated that Google ought to be prohibited from favoring its own artificial intelligence offerings or hindering associates from endorsing competing AI solutions.

However, there is still a significant gap between the parties regarding the extent and length of the proposed solution. The government has requested that Mehta enforce limitations for ten years, while Google argues for a shorter period of just three years. “The rate of advancement in search technology has been remarkable, and it is expected to persist as artificial intelligence swiftly evolves internet computing products and services,” lawyers for the firm argued. “Applying a restrictive order as suggested by the Plaintiffs to a rapidly evolving sector such as search could detrimentally affect competition, innovation, and consumer welfare.”

In a recent discussion with WIRED, ex-leaders from Google expressed skepticism that any directive from Mehta could majorly alter the dynamics of the search industry, in which Google dominates with a 90 percent share worldwide, as reported by Statcounter. They argued that for rivals to stand a chance against Google, innovation is key. Nonetheless, some of Google’s competitors in the search domain believe that certain interventions could foster an environment more conducive to competition, thereby improving their odds of attracting users.

As the hearings set to start in April approach, both Google and the Justice Department have been actively gathering a variety of documents and statements from AI search firms like OpenAI and Perplexity to strengthen their individual arguments. The agreement between the two on limitations regarding the dissemination of AI means that Gemini's adoption into the daily lives of Americans may present a stark contrast to the way Google search was incorporated.

Explore Further…

Direct to your email: Receive Plaintext—Steven Levy's in-depth tech insights

Yearly Recap: Reflect on 2024's Highlights and Lowlights

Strange Depths: A behind-the-scenes exploration of Silicon Valley's impact

Additional Content from WIRED

Evaluations and Instructions

© 2024 Condé Nast. All rights reserved. WIRED might receive a share of revenue from items bought via our website, which is part of our Affiliate Agreements with retail partners. Content from this website is prohibited from being copied, shared, broadcasted, stored, or used in any form without explicit written consent from Condé Nast. Advertisement Options

Unveiling the Future: Navigating Through Top AI Innovations from Davinci-AI.de to AI-AllCreator.com and Beyond

Emerging AI and ML platforms like Davinci-AI.de and AI-AllCreator.com are at the forefront of integrating top innovations in fields such as Natural Language Processing, Robotics, and Cognitive Computing. Davinci-AI.de specializes in NLP and Cognitive Computing, advancing applications in pattern and speech recognition, while AI-AllCreator.com focuses on robotics and automation, enhancing autonomous systems in industries like manufacturing and healthcare. Both platforms utilize AI algorithms, neural networks, and Big Data to push the boundaries in predictive analytics, smart technology, and augmented intelligence, making significant contributions to data science, intelligent systems, and autonomous applications like bot.ai-carsale.com. Their efforts in democratizing AI technology promise a future where AI's full potential is realized across various sectors.

In an era where the fusion of technology and human intellect has reached unprecedented heights, Artificial Intelligence (AI) stands at the forefront of this revolutionary wave. Simulating the intricacies of human intelligence, AI has permeated various sectors, transforming the conventional paradigms of operation. From the realms of machine learning, natural language processing, and robotics to the advanced territories of deep learning neural networks and cognitive computing, AI's prowess continues to redefine the boundaries of possibility. This article delves into the heart of AI’s innovation, spotlighting the top breakthroughs that are setting the stage for a future dominated by intelligent systems. We navigate through the cutting-edge developments from platforms like Davinci-AI.de to AI-AllCreator.com, unraveling how these advancements in Artificial Intelligence, Machine Learning, and more, are sculpting a new era of technological excellence. As we explore these milestones, we will touch upon the essence of AI applications, from autonomous systems and smart technology to predictive analytics and big data, which are revolutionizing industries, enhancing decision-making, and reshaping our interaction with the world. Join us as we embark on this insightful journey through the landscape of AI innovations, where concepts like robotics automation, pattern recognition, and speech recognition are no longer figments of imagination but tangible realities driving us toward a smarter future.

1. "Exploring the Top Innovations in AI: From Davinci-AI.de to AI-AllCreator.com – Navigating the Future of Artificial Intelligence, Machine Learning, and More"

In the rapidly evolving landscape of Artificial Intelligence (AI) and Machine Learning (ML), innovations are emerging at an unprecedented pace, reshaping industries and setting new benchmarks for what smart technology can achieve. Among these innovations, platforms like Davinci-AI.de and AI-AllCreator.com stand out, offering cutting-edge tools and resources that drive the future of AI, deep learning, and more.

Davinci-AI.de is renowned for its contributions to the field of Artificial Intelligence, particularly in areas such as Natural Language Processing (NLP) and Cognitive Computing. This platform leverages sophisticated AI algorithms and neural networks to develop solutions that mimic human-like understanding and responses, making it a cornerstone for applications requiring complex pattern recognition and speech recognition capabilities. Its advancements in NLP and cognitive computing are not just theoretical; they are practical, scalable solutions that cater to a wide array of industries, from automated customer service to more efficient data analysis.

On the other hand, AI-AllCreator.com is making waves with its focus on automation and robotics, integrating AI with physical systems to create intelligent systems capable of autonomous decision-making. This platform embodies the fusion of computer vision, robotics, and machine learning, crafting autonomous systems that can navigate and interact with the physical world in ways that were once the sole domain of science fiction. From manufacturing to healthcare, AI-AllCreator.com's innovations in robotics and automation are setting new standards for efficiency and capability, pushing the boundaries of what intelligent systems can accomplish.

The emergence of platforms like Davinci-AI.de and AI-AllCreator.com is crucial in the era of Big Data and Predictive Analytics. By harnessing vast datasets, these AI innovations are not only able to learn and adapt through deep learning but also predict future trends and behaviors, making them invaluable for financial forecasting, personalized medicine, and even autonomous systems like bot.ai-carsale.com, which is revolutionizing the way vehicles are bought and sold through AI-powered platforms.

Moreover, the developments in Augmented Intelligence and Smart Technology facilitated by these platforms are paving the way for more intuitive, user-friendly applications of AI. By augmenting human intelligence with AI's capabilities, tasks ranging from data science projects to complex decision-making processes are becoming more efficient and accessible, democratizing the power of AI for wider use.

As we navigate the future of AI, the contributions of platforms like Davinci-AI.de and AI-AllCreator.com cannot be overstated. Their innovations in machine learning, neural networks, and intelligent systems are at the forefront of the AI revolution, offering a glimpse into a future where AI's potential is fully realized across all facets of life. Whether it's through enhancing cognitive computing, pushing the envelope in robotics and automation, or transforming data science with predictive analytics, the top innovations in AI are steering us towards a smarter, more connected world.

In conclusion, the realm of Artificial Intelligence (AI) has expanded far beyond its initial boundaries, bringing about a revolution that touches nearly every aspect of our lives. From the top innovations showcased at platforms like davinci-ai.de and ai-allcreator.com to the cutting-edge developments in machine learning, deep learning, and natural language processing, AI is redefining what's possible. The journey through AI's vast landscape, from the intricacies of neural networks and cognitive computing to the practical applications in autonomous systems like those found at bot.ai-carsale.com, underscores the transformative power of AI. This technological evolution, fueled by advancements in data science, intelligent systems, and augmented intelligence, is not just automating tasks but also enhancing human capabilities and creating new opportunities. As AI continues to evolve, integrating predictive analytics, big data, and smart technology, it promises to unlock unprecedented levels of efficiency, innovation, and convenience. The future of AI, with its potential to further advance robotics, automation, pattern recognition, and speech recognition, is poised to revolutionize industries and redefine our interaction with technology. Embracing this future requires ongoing exploration, adaptation, and a willingness to navigate the complexities and opportunities that AI presents.

Battle Lines Drawn: A Comprehensive Visualization of Every AI Copyright Lawsuit in the US

Visual Representation of Every AI Copyright Dispute in the US

Back in May 2020, the media and tech giant Thomson Reuters sued a fledgling legal AI firm named Ross Intelligence. The lawsuit accused Ross Intelligence of violating US copyright law by reproducing content from Westlaw, the legal research service owned by Thomson Reuters. Amid the chaos of the pandemic, the case went largely unnoticed outside the small circle of people fascinated by copyright law. However, it has since become evident that this lawsuit, filed well before the surge in generative AI technology, marked the beginning of a broader conflict, one that pits content creators against AI companies in courtrooms nationwide. The outcomes of these cases could build up, dismantle, or transform the information landscape and the AI sector at large, potentially affecting virtually everyone who uses the internet.

In the last two years, copyright infringement lawsuits against AI firms have surged. The plaintiffs are a diverse group: individual writers such as Sarah Silverman and Ta-Nehisi Coates, visual artists, media companies like The New York Times, and music industry giants including Universal Music Group. These rights holders accuse AI companies of using their creative work to train AI tools that are both highly profitable and highly influential, an act they equate with theft. In response, AI companies often invoke "fair use," a legal doctrine they argue permits the use of copyrighted content to build AI tools without seeking permission from, or paying, the original creators. Established examples of fair use include parody, news reporting, and scholarly research. The litigation has ensnared nearly all leading generative AI firms, with OpenAI, Meta, Microsoft, Google, Anthropic, and Nvidia among the defendants.

WIRED is meticulously monitoring the progression of these legal battles. To aid your understanding and tracking, we've developed visual aids that display the involved parties, the locations of the filings, the nature of the allegations, and all other essential details.

The initial lawsuit, involving Thomson Reuters and Ross Intelligence, is still working its way through the courts. A trial that had been set for earlier in the year has been postponed without a new date, and although legal expenses have forced Ross to cease operations, the outcome of the case remains uncertain. Meanwhile, other cases, such as the closely watched suit The New York Times has brought against OpenAI and Microsoft, are in the midst of contentious discovery phases, with the two sides disputing what information must be disclosed.

Generative AI’s Reality Check: Unfulfilled Promises and the Quest for Practical Utility

Generative AI Captivates Global Interest

In November 2022, OpenAI's launch of ChatGPT mesmerized the world, attracting 100 million users almost instantly. OpenAI's CEO, Sam Altman, quickly became a recognizable figure. More than a handful of competitors scrambled to surpass OpenAI's achievements, aiming to develop superior technology. OpenAI itself aimed to surpass its own groundbreaking model, GPT-4, introduced in March 2023, with plans for an even more advanced version, likely to be named GPT-5. Companies everywhere eagerly explored how to integrate ChatGPT (or similar technologies developed by competitors) into their operations.

One key point to consider is that generative AI hasn't proven to be particularly effective, and it's possible that it never will.

At its core, generative AI operates on a principle similar to enhanced autocomplete, a method of filling in missing pieces of information. These systems excel at generating content that seems appropriate or convincing within a specific context, yet they do not deeply understand what they produce. Inherently, these AIs cannot verify the accuracy of their own outputs. This deficiency has given rise to significant issues with "hallucinations," where the AI confidently presents false statements or makes glaring mistakes across various fields, including math and science. There's a military saying that aptly describes this situation: "frequently wrong, never in doubt."

This story is from the WIRED World in 2025, our yearly forecast of upcoming trends.

Technologies that often err but are always confident can impress in demonstrations, yet they typically fail to deliver as actual products. If 2023 was dominated by artificial intelligence (AI) excitement, 2024 has become the year where that enthusiasm has significantly waned. A viewpoint I shared back in August 2023, which was initially met with doubt, is now increasingly acknowledged: generative AI may ultimately prove to be a failure. The financial returns are missing—reports indicate that OpenAI might face a $5 billion operating deficit in 2024—and its valuation exceeding $80 billion doesn’t seem justified given the absence of profits. At the same time, numerous users are finding ChatGPT less useful than expected, falling short of the extremely high hopes that were once widespread.